Introduction

I’ve been contributing to opentelemetry-rust for a while now - an important project both for the Rust community in general and for my day job at Datadog. Since being promoted to an approver (🥳), I’ve been spending a lot of time reading other people’s PRs. And as we all know - to review a PR well, you kinda have to understand the code that underlies it, as well as the overall structure of the system it sits within.

A central element of this project that took me a while to grasp is Context. This exists in all OpenTelemetry (OTel) projects and serves as the core mechanism for moving observability data along through an instrumented system. It’s also – like all good things in computer science – a heavily overloaded word.

You can read about the OTel sense of the word in the OTel spec (and the related W3C trace context spec), but the description is, to an OTel newcomer, perhaps a little opaque:

A Context is a propagation mechanism which carries execution-scoped values across API boundaries and between logically associated execution units. Cross-cutting concerns access their data in-process using the same shared Context object.

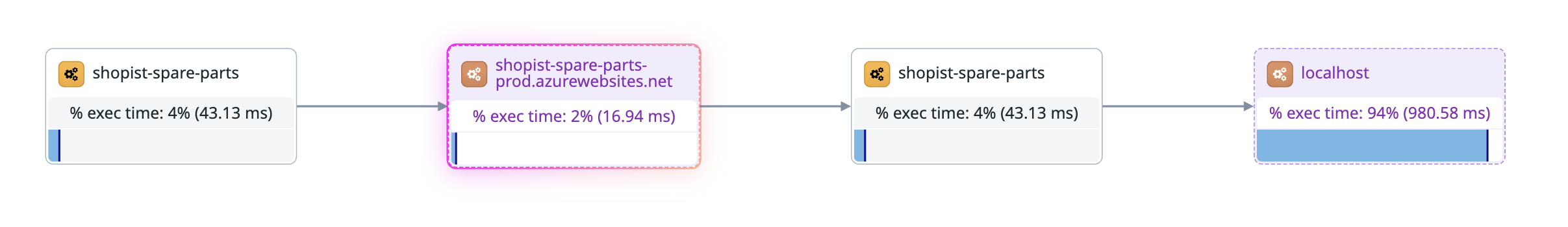

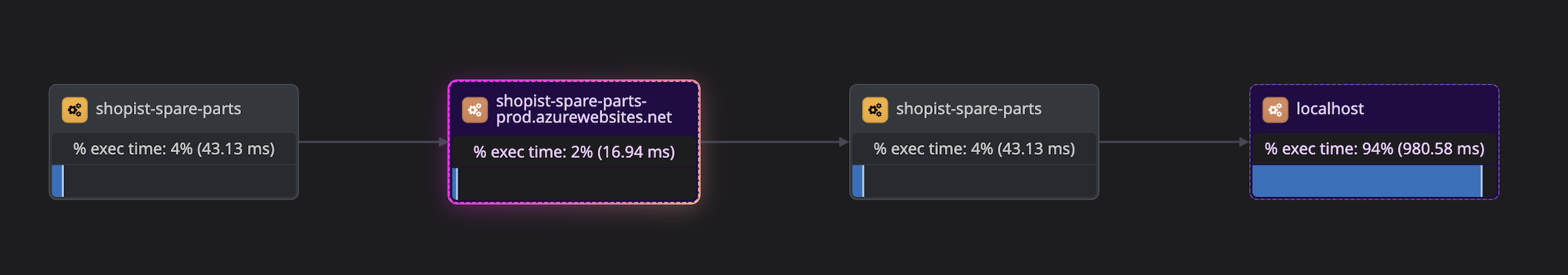

Between services, what we’re aiming for is to be able to do what you see in the image below - graph a request as it flows from service-to-service in our architecture - which is what the W3C specification facilitates by standardising some headers for passing trace information around:

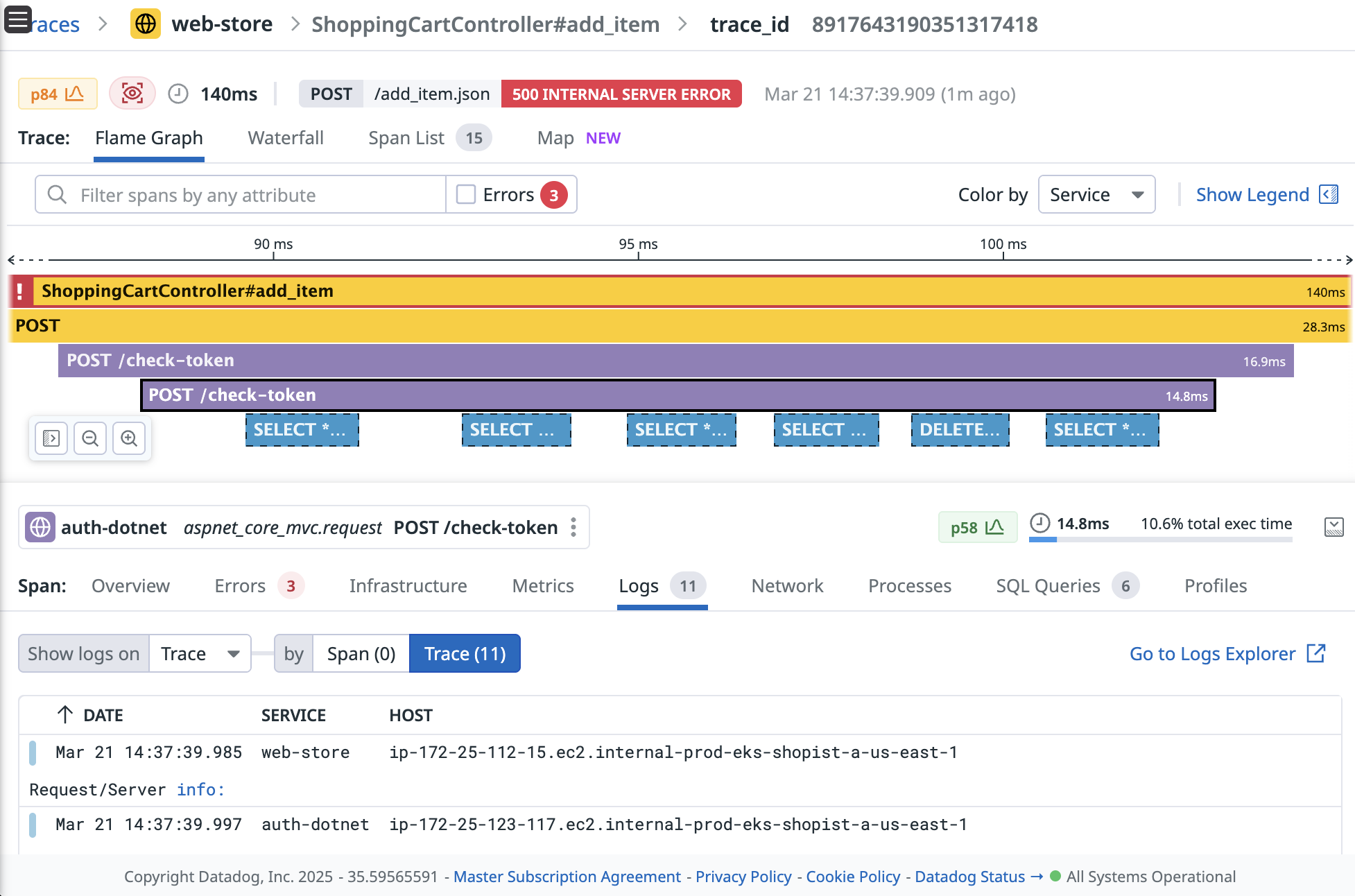

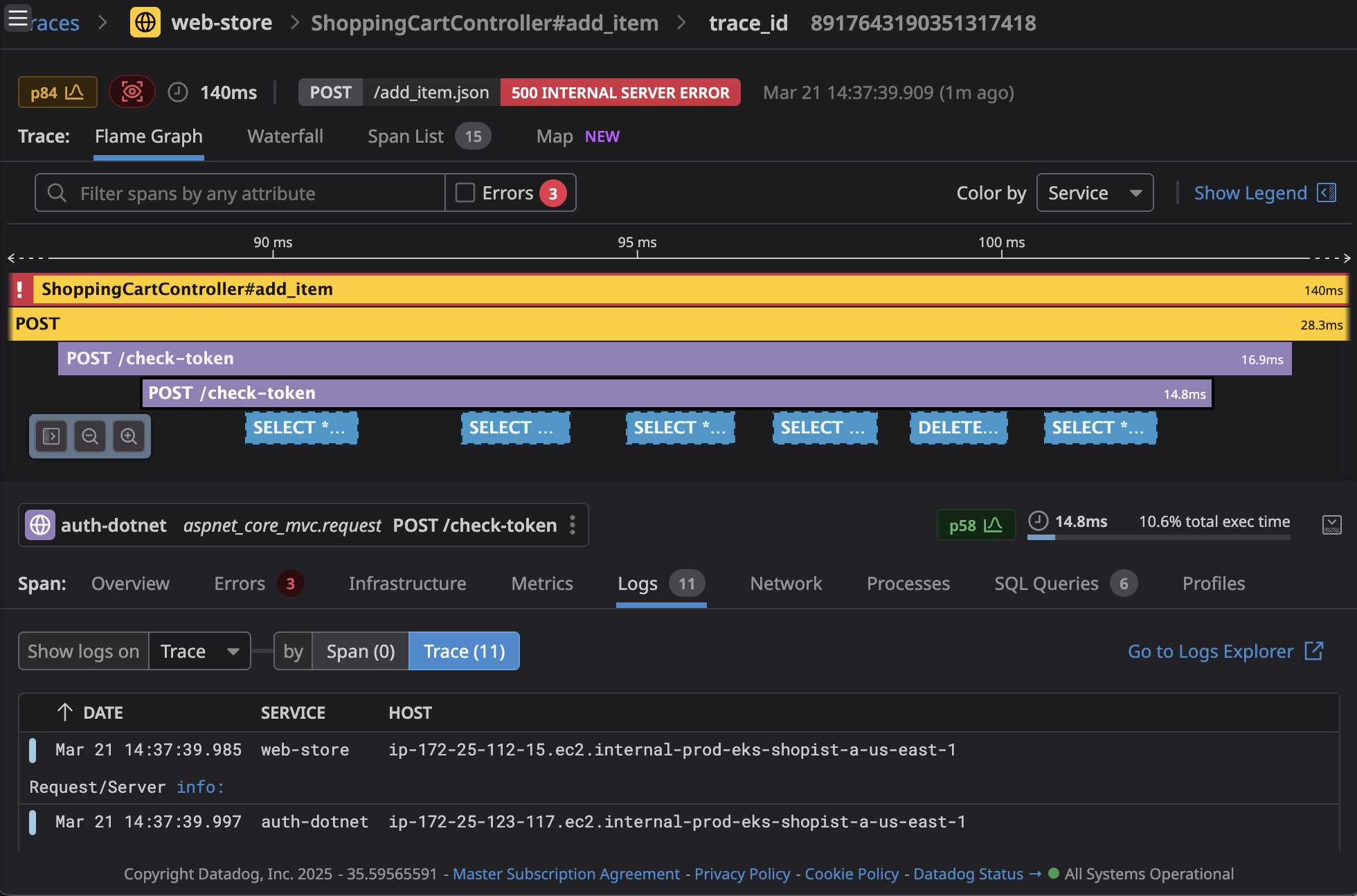

Within a service - we expect to be able to see the flow of a request through its internal components, as well as see where outgoing requests fire off to, and tie all of the other observability signals the code emits in that time up to that request - note that we can see our outgoing SQL requests and log messages, as well as the spans of the request itself:

This article is about the latter - how does OTel, and opentelemetry-rust in particular - manage context as it flows through a service?

Understanding Context

In process, the central purpose of Context is to carry the extra telemetry data we need to make sense of the request alongside it as the request moves through our service, making it available to everything that needs it. This is the mechanism that underlies all the magic correlation - tying a particular incoming request to your service to the logs and metrics it emits as that request is processed, to the outgoing requests it issues to satisfy the request, and ultimately back to the success or failure of the request itself.

In Rust, the Context object looks like this:

```rust
#[derive(Clone, Default)]
pub struct Context {
    pub(crate) span: Option<Arc<SynchronizedSpan>>,
    entries: Option<Arc<EntryMap>>,
}
```

Whenever code is running in your application, we can get the current instance using Context::current(), and we can read and mutate those values (although in most cases we won’t do this directly as application developers).

How’s it work?

Imagine a typical REST API — something you’d monitor with OpenTelemetry. Everything kicks off when an incoming HTTP request is received. We want to group all related activities together: logs, outgoing requests, and associated metadata. We can see how Context works by chasing one such request through our system.

Handling an Incoming Request

Looking at one of OTel Rust’s examples, we see that this function is used to extract some information from the incoming request headers:

```rust
// Extract the context from the incoming request headers
fn extract_context_from_request(req: &Request<Incoming>) -> Context {
    global::get_text_map_propagator(|propagator| {
        propagator.extract(&HeaderExtractor(req.headers()))
    })
}
```

And we can see that this function is creating the first Context needed for this request, carrying all relevant details forward. The extractor checks for incoming headers on the HTTP request, extracting contextual information that the incoming request might contain, and writing it into Context. This allows us to track the request across services. Super concretely - this means:

- The traceparent and tracestate headers, part of the W3C Trace Context, which lets us build a complete trace of a request that flows across multiple services in our system
- Any baggage headers, which are used to carry extra, user-defined key-value pairs along with our request

This isn’t an exhaustive list, but these are the two main ones. In both cases we’re going to need this information accessible to our service, so we establish it as soon as we start handling the incoming request.
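To make the traceparent header a little less abstract, here is a minimal, std-only sketch of parsing one. It is not what opentelemetry-rust's propagator does internally; `TraceParent` and `parse_traceparent` are names made up for this illustration, and it only handles the four-field version 00 layout from the W3C spec (`version-traceid-parentid-flags`), without the spec's rules for future versions.

```rust
/// The four fields of a W3C `traceparent` header (version 00 layout).
/// Hypothetical type for illustration only.
#[derive(Debug, PartialEq)]
pub struct TraceParent {
    pub version: u8,
    pub trace_id: u128,
    pub parent_id: u64,
    pub flags: u8,
}

/// Parse `version-traceid-parentid-flags`, returning None if malformed.
pub fn parse_traceparent(header: &str) -> Option<TraceParent> {
    let parts: Vec<&str> = header.trim().split('-').collect();
    if parts.len() != 4 {
        return None;
    }
    // Field widths are fixed: 2, 32, 16 and 2 lowercase hex characters.
    let widths = [2usize, 32, 16, 2];
    for (part, width) in parts.iter().zip(widths) {
        let is_lower_hex = part
            .bytes()
            .all(|b| matches!(b, b'0'..=b'9' | b'a'..=b'f'));
        if part.len() != width || !is_lower_hex {
            return None;
        }
    }
    let trace_id = u128::from_str_radix(parts[1], 16).ok()?;
    let parent_id = u64::from_str_radix(parts[2], 16).ok()?;
    // All-zero trace and parent IDs are invalid.
    if trace_id == 0 || parent_id == 0 {
        return None;
    }
    Some(TraceParent {
        version: u8::from_str_radix(parts[0], 16).ok()?,
        trace_id,
        parent_id,
        flags: u8::from_str_radix(parts[3], 16).ok()?,
    })
}
```

Feeding it the sample header from the W3C spec, `00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01`, yields the trace ID, the caller's span ID, and a flags byte with the "sampled" bit set.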

Next, we start a span that is logically beneath this parent traceparent, pointing at the parent Context we’ve created by reading the incoming request headers:

```rust
let parent_cx = extract_context_from_request(&req);
let response = {
    // Create a span for our work processing the request in this service
    let tracer = get_tracer();
    let mut span = tracer
        .span_builder("router")
        .with_kind(SpanKind::Server)
        .start_with_context(tracer, &parent_cx);

    // Create a new context deriving from our parent context, with this span attached
    let cx = Context::default().with_span(span);
    // ...
```

At this point, any other processing that happens for this request can look on the Context to see everything it needs to know about the active span, as well as the parent span.
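What does "logically beneath" mean in terms of data? A rough model, assuming nothing about the SDK's real types: a child span keeps the parent's trace ID, gets a fresh span ID of its own, and records the parent's span ID. `SpanContext`, `Span` and `start_child` below are stand-ins invented for this sketch, and the counter-based ID source is an assumption made to keep it std-only (a real SDK would generate random IDs).

```rust
use std::sync::atomic::{AtomicU64, Ordering};

/// Identifies one span within one trace (hypothetical, simplified).
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct SpanContext {
    pub trace_id: u128,
    pub span_id: u64,
}

#[derive(Debug)]
pub struct Span {
    pub name: &'static str,
    pub context: SpanContext,
    pub parent_span_id: Option<u64>,
}

// Stand-in ID source: a real SDK would generate random span IDs.
static NEXT_SPAN_ID: AtomicU64 = AtomicU64::new(1);

/// Start a span beneath `parent`: same trace, fresh span ID, parent recorded.
pub fn start_child(name: &'static str, parent: &SpanContext) -> Span {
    Span {
        name,
        context: SpanContext {
            trace_id: parent.trace_id,
            span_id: NEXT_SPAN_ID.fetch_add(1, Ordering::Relaxed),
        },
        parent_span_id: Some(parent.span_id),
    }
}
```

Calling `start_child("router", &remote)` with the context extracted from the headers, and then `start_child("db.query", &router.context)`, gives two spans in the same trace whose parent IDs chain back to the caller. That chain is what a tracing backend uses to draw the tree.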

Consuming Context

Now that we have some useful information on Context, let’s see where it goes. Everything emitted (spans, logs, metrics) can be tied back to spans, allowing us to correlate log data with the request being processed.

For example, we could use the Context directly to add an event to the Span - sort of like a span-attached log message:

```rust
cx.span().add_event(
    "Got response!",
    vec![KeyValue::new("status", res.status().to_string())],
);
```

What about regular logging, though? We don’t explicitly pass Context through - we couldn’t even if we wanted to, because Rust’s standard log crate has no concept of OTel:

```rust
error!(
    name: "my-event-name",
    target: "my-system",
    event_id = 20,
    user_name = "otel",
    user_email = "[email protected]",
    message = "This is an example message"
);
```

If we go and have a look at SdkLogger - the logger that we “plug in” to the back of the log crate, we can see what happens when it tries to emit a log:

```rust
fn emit(&self, mut record: Self::LogRecord) {
    // ...

    if record.trace_context.is_none() {
        // Add the trace_context to the log event before we emit it by reading the active Context
        Context::map_current(|cx| {
            cx.has_active_span().then(|| {
                record.trace_context = Some(TraceContext::from(cx.span().span_context()))
            })
        });
    }
    // ...
}
```

We can see that SdkLogger is grabbing the active Context and pulling the span information it needs to emit as part of the log message from it. Here the value of Context becomes obvious - we can pass along the state we need, mostly implicitly, to tie all of our telemetry together!
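The same idea can be shown in a self-contained toy, which may help if the SDK types are unfamiliar. This is a sketch under simplifying assumptions, not SdkLogger itself: `ACTIVE_SPAN`, `set_active_span` and this `emit` are invented for the example, and the "active Context" is reduced to a thread-local holding just the current span's IDs.

```rust
use std::cell::RefCell;

#[derive(Clone, Copy, Debug, PartialEq)]
pub struct TraceContext {
    pub trace_id: u128,
    pub span_id: u64,
}

#[derive(Debug, Default)]
pub struct LogRecord {
    pub message: String,
    pub trace_context: Option<TraceContext>,
}

thread_local! {
    // Stand-in for the active Context: just the current span's IDs, if any.
    static ACTIVE_SPAN: RefCell<Option<TraceContext>> = RefCell::new(None);
}

/// Mark a span as active (or clear it) on the calling thread.
pub fn set_active_span(span: Option<TraceContext>) {
    ACTIVE_SPAN.with(|slot| *slot.borrow_mut() = span);
}

/// Stamp the record with the active span unless the caller already set one.
pub fn emit(mut record: LogRecord) -> LogRecord {
    if record.trace_context.is_none() {
        record.trace_context = ACTIVE_SPAN.with(|slot| *slot.borrow());
    }
    record
}
```

The calling code never mentions spans when it logs; the correlation comes purely from whatever happens to be active on the thread at the time `emit` runs.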

Outgoing Requests

What about outgoing requests? - we saw at the start how we pull trace headers off of incoming requests, so we’re going to need to make sure any downstream services we in turn call get the same Context to tie things together.

If we stick with the same example app, we can check the propagator to see how it writes out span details from Context to some writable thing - like an HTTP client’s request headers:

```rust
// Inject context into the request headers
let mut req = hyper::Request::builder().uri(url);
global::get_text_map_propagator(|propagator| {
    propagator.inject_context(&cx, &mut HeaderInjector(req.headers_mut().unwrap()))
});
```

We can use that same propagator from before, but rather than asking it to read from an incoming request into a Context, we can ask it to write from a Context into an outgoing request. You can read more about this pattern in the OTel spec in the section on Propagators.
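For a feel of what ends up on the wire, here is a small std-only sketch of the "write" direction. It is an illustration rather than the library's propagator: the `Injector` trait is redefined locally as a stand-in, `SpanContext` and `inject` are hypothetical names, and a plain `HashMap` plays the role of the outgoing request's headers.

```rust
use std::collections::HashMap;

/// Anything we can write string headers into (local stand-in trait).
pub trait Injector {
    fn set(&mut self, key: &str, value: String);
}

impl Injector for HashMap<String, String> {
    fn set(&mut self, key: &str, value: String) {
        self.insert(key.to_lowercase(), value);
    }
}

/// The span details we want the downstream service to see (simplified).
pub struct SpanContext {
    pub trace_id: u128,
    pub span_id: u64,
    pub sampled: bool,
}

/// Write a version 00 `traceparent` header describing `cx` into `carrier`.
pub fn inject(cx: &SpanContext, carrier: &mut dyn Injector) {
    let flags: u8 = if cx.sampled { 1 } else { 0 };
    // Zero-padded lowercase hex: 32 chars of trace ID, 16 of span ID, 2 of flags.
    let header = format!("00-{:032x}-{:016x}-{:02x}", cx.trace_id, cx.span_id, flags);
    carrier.set("traceparent", header);
}
```

Because the function only depends on the trait, the same code could write into any carrier that can hold string key-value pairs, which is the point of separating the propagator from the HTTP library.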

Baggage

If we jump back to the Understanding Context section, we see that we’ve been using the span field so far, and haven’t touched the entries field. This is because we often just want the span information that tells us the ID of the span we’re processing, so we can emit telemetry referencing that. But Context is a general mechanism for carrying state along - not just span information.

This happens using the entries field, and baggage is a great example of a general-purpose use case that leverages this. It allows us to attach arbitrary key-value pairs to requests, which are propagated across service boundaries, again, using a propagator. Here we see how the baggage propagator derives a new Context by adding baggage from some readable thing - for instance, an incoming HTTP request’s headers:

```rust
// Create a new Context by adding in any baggage headers
fn extract_with_context(&self, cx: &Context, extractor: &dyn Extractor) -> Context {
    if let Some(header_value) = extractor.get(BAGGAGE_HEADER) {
        let baggage = header_value.split(',').filter_map(|context_value| {
            // Parse key-value pairs...
        });
        cx.with_baggage(baggage)
    } else {
        cx.clone()
    }
}
```

Likewise, we need to be able to propagate our baggage onwards into outgoing requests, just like we saw above with the span’s traceparent information:

```rust
// Writing Baggage to headers
fn inject_context(&self, cx: &Context, injector: &mut dyn Injector) {
    let baggage = cx.baggage();
    if !baggage.is_empty() {
        let header_value = baggage
            .iter()
            .map(|(name, (value, metadata))| {
                // Format key-value pairs for header...
            })
            .collect::<Vec<String>>()
            .join(",");
        injector.set(BAGGAGE_HEADER, header_value);
    }
}
```

Generalizing Context

We can also use Context just like Baggage does, to store arbitrary data. We submit typed elements to the Context object, and we can grab these anywhere in the processing of our request in-process.

```rust
// Store a custom value type in Context
#[derive(Debug, PartialEq)]
struct CatMeme(&'static str);

// Store cat meme in context
let cx = Context::new().with_value(CatMeme("I can has context?"));

// Later retrieve it
if let Some(meme) = cx.get::<CatMeme>() {
    println!("Found meme: {}", meme.0);
}
```

If we needed this extra information to cross service boundaries - over HTTP calls, say - we’d have to implement TextMapPropagator - like spans and baggage do - as well.

This isn’t really something you’d want to use to pass along data that your business logic depends on - that should be explicit in your service contracts and interfaces - but it could be useful for extra observability-related data.
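How can one object hold values of arbitrary types and hand them back with the right type? One common way to do it, sketched below with only the standard library, is a map keyed by `TypeId` holding type-erased values. I'm not claiming this is exactly how the real entries field is laid out; `MiniContext` and `RequestId` are names invented here, and `CatMeme` is borrowed from the example above.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;
use std::sync::Arc;

/// A toy context: at most one value per Rust type (hypothetical, simplified).
#[derive(Clone, Default)]
pub struct MiniContext {
    entries: Arc<HashMap<TypeId, Arc<dyn Any + Send + Sync>>>,
}

impl MiniContext {
    /// Return a new context containing `value`; `self` is left unchanged.
    pub fn with_value<T: Any + Send + Sync>(&self, value: T) -> Self {
        // Copy the map of Arc handles, then add or replace the entry for T.
        let mut entries = (*self.entries).clone();
        entries.insert(TypeId::of::<T>(), Arc::new(value));
        MiniContext { entries: Arc::new(entries) }
    }

    /// Look up the value stored for type `T`, if there is one.
    pub fn get<T: Any + Send + Sync>(&self) -> Option<&T> {
        self.entries
            .get(&TypeId::of::<T>())
            .and_then(|value| value.downcast_ref::<T>())
    }
}

// Example value types; the type itself acts as the lookup key.
#[derive(Debug, PartialEq)]
pub struct CatMeme(pub &'static str);

#[derive(Debug, PartialEq)]
pub struct RequestId(pub u64);
```

Two design points fall out of this shape: using the type as the key means a newtype wrapper is the natural way to avoid collisions between unrelated values, and because `with_value` returns a new context instead of mutating, anything still holding the old one keeps seeing the old values.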

Under the Hood

If we look at Context’s implementation, we see that it fetches the active Context from a thread-local storage mechanism, ensuring each thread has a single instance active at a given time:

```rust
thread_local! {
    static CURRENT_CONTEXT: RefCell<ContextStack> = RefCell::new(ContextStack::default());
}
```

And then the Context implementation uses this thread-local variable:

```rust
impl Context {
    // Returns an immutable snapshot of the current thread's context
    pub fn current() -> Self {
        Self::map_current(|cx| cx.clone())
    }

    // Applies a function to the current context returning its value
    pub fn map_current<T>(f: impl FnOnce(&Context) -> T) -> T {
        CURRENT_CONTEXT.with(|cx| cx.borrow().map_current_cx(f))
    }
}
```

For synchronous web servers, this model works well - each request gets a thread, runs to completion, and then a new Context is created for the next request. Whenever we clone a Context object, we can see that we’re cloning the inner Arc wrapping the entries and span information. When all clones of a particular Arc are dropped - for instance, because we’re done working on the request that created the surrounding span - the span itself is dropped, and the Drop trait implementation it provides submits its data off to whatever external observability tool we are using.
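The "stack" in ContextStack hints at how a context becomes current and later stops being current. Here is a minimal sketch of that pattern, assuming a much simpler design than the real one: each context is just a label, and `attach`, `current` and `Guard` are hypothetical names for this example. Attaching pushes onto a thread-local stack and hands back a guard; dropping the guard restores whatever was current before.

```rust
use std::cell::RefCell;
use std::marker::PhantomData;

thread_local! {
    // Stand-in for the per-thread context stack; each entry is just a label.
    static STACK: RefCell<Vec<&'static str>> = RefCell::new(Vec::new());
}

/// Restores the previously current context when dropped.
pub struct Guard {
    depth: usize,
    // A raw pointer makes the guard !Send, so it cannot be dropped on a
    // different thread from the one whose stack it modified.
    _not_send: PhantomData<*const ()>,
}

/// Make `cx` the current context on this thread until the guard is dropped.
pub fn attach(cx: &'static str) -> Guard {
    STACK.with(|stack| {
        let mut stack = stack.borrow_mut();
        stack.push(cx);
        Guard { depth: stack.len(), _not_send: PhantomData }
    })
}

/// The context currently on top of this thread's stack, if any.
pub fn current() -> Option<&'static str> {
    STACK.with(|stack| stack.borrow().last().copied())
}

impl Drop for Guard {
    fn drop(&mut self) {
        // Pop back to the depth just below this guard.
        STACK.with(|stack| stack.borrow_mut().truncate(self.depth - 1));
    }
}
```

Nesting then behaves the way you would hope: attach "request-1", attach "db-call" inside it, and once the inner guard goes out of scope `current()` reports "request-1" again.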

What about async?

You’re probably wondering: If I use Tokio, how does this work when requests jump across threads? Doesn’t it all get horribly mixed up? Yes! This does make things a bit harder.

FutureExt

To be a bit more precise, async code doesn’t jump furiously across threads just whenever - it gets suspended when it hits a yield point: an .await on an operation that can’t complete immediately, leaving a Future the runtime will pick up and resume later. This could be making a call to an external HTTP service, or calling tokio::time::sleep:

```rust
async fn do_something() {
    // This code runs on one thread
    let result = some_async_call().await;
    // Execution can switch to another thread here
    // Continuing execution might be on a different thread
}
```

This is why async works well. Instead of blocking a thread while we wait for the outside world, we yield it back to the runtime so it can execute other work, resuming only when needed. When we resume execution, we might be on a different thread, and even if we’re not, something else may have run on our thread since we yielded. This means the active Context is almost certainly wrong, and we need to restore the correct one for the piece of work we’re now running.
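The underlying problem is easy to reproduce without an async runtime at all. The sketch below uses plain OS threads as a stand-in for "resumed on a different worker" (that substitution is an assumption of the example, as are all the function names): a value stored in a thread-local is simply not there on another thread unless we carry it over ourselves and set it again.

```rust
use std::cell::RefCell;
use std::thread;

thread_local! {
    // Stand-in for the active Context: an optional request label per thread.
    static CURRENT_REQUEST: RefCell<Option<String>> = RefCell::new(None);
}

pub fn set_current(id: &str) {
    CURRENT_REQUEST.with(|slot| *slot.borrow_mut() = Some(id.to_string()));
}

pub fn current() -> Option<String> {
    CURRENT_REQUEST.with(|slot| slot.borrow().clone())
}

/// Read the "current request" from a freshly spawned thread.
pub fn current_on_other_thread() -> Option<String> {
    thread::spawn(current).join().expect("thread panicked")
}

/// Carry the value across explicitly, then re-establish it on the new thread.
pub fn current_on_other_thread_with(captured: Option<String>) -> Option<String> {
    thread::spawn(move || {
        if let Some(id) = captured {
            set_current(&id);
        }
        current()
    })
    .join()
    .expect("thread panicked")
}
```

After `set_current("req-42")`, the first helper sees nothing on the other thread, while the second sees "req-42" because the value travelled with the work and was re-installed there. Capturing the context and re-installing it wherever the work resumes is the general shape of the fix.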

To do this in Rust, we rely on FutureExt, which allows us to attach Context to a future, ensuring it’s preserved across execution boundaries. In practice this means whenever we do something yielding, we have to explicitly “wrap up” the resulting future - we can see this in our example app:

```rust
match (req.method(), req.uri().path()) {
    (&hyper::Method::GET, "/health") => handle_health_check(req).with_context(cx).await,
    (&hyper::Method::GET, "/echo") => handle_echo(req).with_context(cx).await,
    // ...
};
```

Here, handle_health_check and handle_echo are both async, meaning they return a future. We then use with_context to wrap them up, and if we peer into the wrapper type it returns, we see that it automatically re-attaches the correct Context when the future is polled:

```rust
fn poll(self: Pin<&mut Self>, task_cx: &mut TaskContext<'_>) -> Poll<Self::Output> {
    let this = self.project();
    let _guard = this.otel_cx.clone().attach();

    this.inner.poll(task_cx)
}
```

Wrapping up

There is a bit more to Context than initially meets the eye - but I hope this has given you an intuition for how it works! If you want to know more, or squash some bugs, hop on over to opentelemetry-rust on GitHub.