Overview

If you prefer videos, I spoke with my Datadog colleague Jason Hand about this for his AI Tools Lab - check it out here.

Like just about everyone else with a keyboard and a programming habit, I’ve been playing around with coding assistants like Cursor, Claude Code and the ol’ copy-paste-into-ChatGPT for a while now. I find they work well and save time in some circumstances - namely, anything that could be described as intelligent copy-paste. Have an API spec and want to build out the DB models, DTOs, and ORM configuration? Written a nice interface and an implementation or two of it, and want to duplicate it for some variants? Perfect - let it rip. The bounds of the problem are well understood, and you feel like you could almost get away with fancy templates and some regexps, but not quite.

On the other hand, I am pretty skeptical of what we have access to today for building anything kinda chunky, or anything where I don’t already know what “good” looks like. I figure that because these things are so overwhelmingly fallible, you need to be able to look at the output, grok it, and give it a 👍 or 👎. And because programming is theory building, if you’re not intimately involved in constructing the program, it is very hard to judge whether the thing is good or right: you’ve never been forced to build up the mental model that judgement requires.

So I plod along, with smatterings of carefully curated Claude Code output popping up here and there in otherwise human-written changes. But I realised I might just be leaning into my own existing biases here, so I thought: what if we push this way beyond its sensible bounds and see where we land?

The Experiment

I’ve used path tracers - essentially ray tracing without the shortcuts - as a toy problem to sink my teeth into new programming languages for a while now. You can find plenty of descriptions of the algorithms and samples online, and it’s pretty easy to build a basic one. But they have reasonably complex data modelling needs, you need to build them to run concurrently if you want them to ever finish, and there are enough wild variants that you can go as crazy as you want making them more and more fancy.

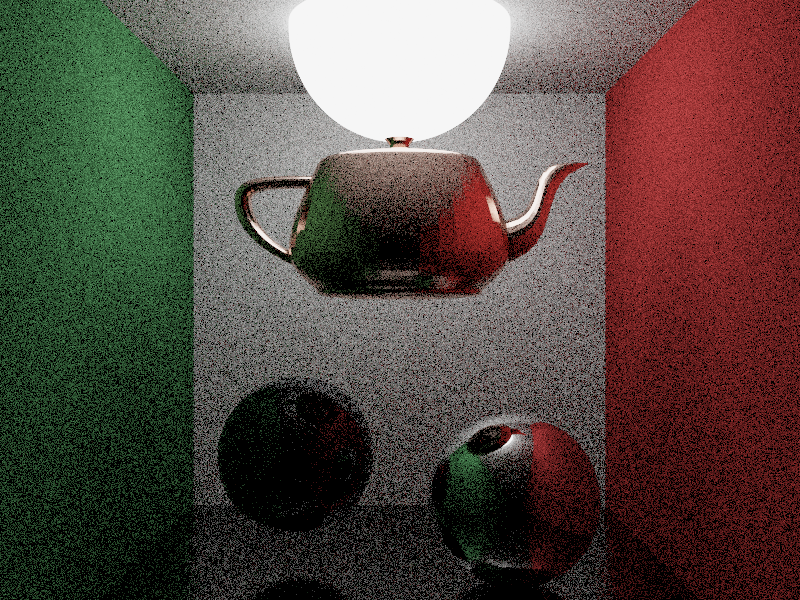

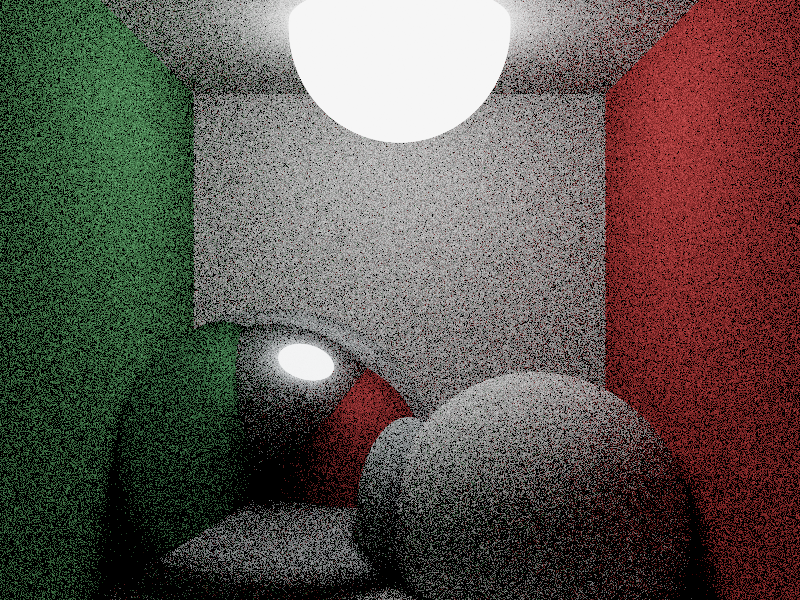

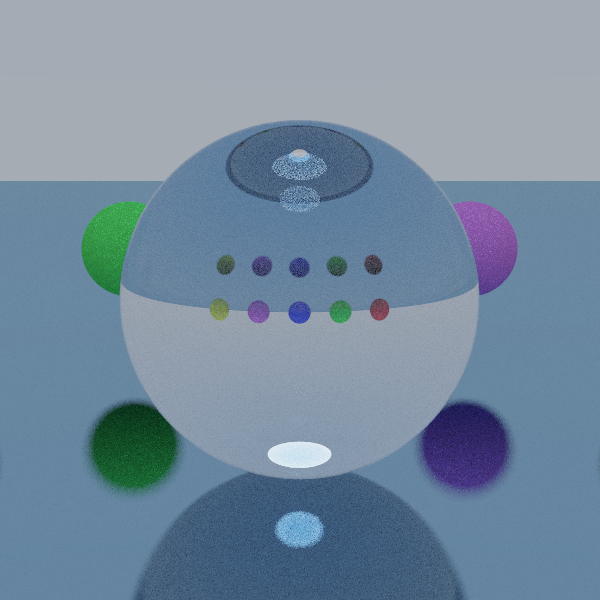

Also, you get to render shiny-noisy pictures of reflective teapots, which I at least find satisfying.

So I figured this is a great toy problem for testing the limits of agentic coding. I can give the model high-level instructions, let it rip, and see how well it does. How far can we get with a chunky problem and minimal intervention?

The Setup

I used Claude Code for this 1, working with it to build out a CLAUDE.md file with some boundaries. I set some technical constraints: Rust with Rayon for parallelism, path tracing with Monte Carlo integration, right-handed coordinate system. I also established some guard-rails as a mechanism for self-reflection - run cargo test after every change, use cargo bench benchmarks to catch and debug performance regressions, that sort of thing.
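Since Monte Carlo integration does the heavy lifting in a path tracer, a small illustration may help. This is a hedged sketch, not code from the project: it estimates the integral of cos θ over the hemisphere (exactly π, the kind of integral that shows up in diffuse lighting) by averaging f(ω)/pdf(ω) over uniformly sampled directions, where pdf = 1/(2π). Under uniform hemisphere sampling, cos θ is itself uniform on [0, 1], so the sketch draws it directly. `XorShift64` and `estimate_cosine_integral` are hypothetical names; the tiny xorshift generator stands in for the `rand` crate so the example needs only the standard library.

```rust
/// Hypothetical minimal xorshift64 PRNG; fine for a demo, not a production sampler.
struct XorShift64 {
    state: u64,
}

impl XorShift64 {
    fn new(seed: u64) -> Self {
        // xorshift must never start from an all-zero state.
        Self { state: if seed == 0 { 0x9E37_79B9_7F4A_7C15 } else { seed } }
    }

    /// Returns a uniform f64 in [0, 1) built from the top 53 bits of the state.
    fn next_f64(&mut self) -> f64 {
        let mut x = self.state;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.state = x;
        (x >> 11) as f64 / (1u64 << 53) as f64
    }
}

/// Monte Carlo estimate of the integral of cos(theta) over the unit hemisphere.
/// Uniform hemisphere sampling has pdf 1 / (2*pi); under it cos(theta) is uniform on [0, 1].
fn estimate_cosine_integral(samples: usize, rng: &mut XorShift64) -> f64 {
    let pdf = 1.0 / (2.0 * std::f64::consts::PI);
    let mut sum = 0.0;
    for _ in 0..samples {
        let cos_theta = rng.next_f64(); // integrand value f(w) = cos(theta)
        sum += cos_theta / pdf; // weight each sample by 1 / pdf
    }
    sum / samples as f64
}

fn main() {
    let mut rng = XorShift64::new(42);
    for &n in &[16usize, 256, 4096, 65536] {
        let est = estimate_cosine_integral(n, &mut rng);
        let err = (est - std::f64::consts::PI).abs();
        println!("N = {:>6}: estimate = {:.5}, |error| = {:.5}", n, est, err);
    }
}
```

The error falls roughly as 1/√N in the number of samples, so halving the noise takes about four times as many samples, which is why low-SPP renders come out noisy.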

So then, a maximum hands-off approach: work through a project plan module by module (core data structures, camera, scene geometry, light interaction models), with me taking an extremely casual look after each task - does it build? Are there more files? Did it add directories that sound serious? If yes, commit and move on until we get to a point where we can actually render something.

So, how’d it go?

Kinda surprisingly plausible? Mostly! With some properly awful code mixed in to taste. Having said that, it wrote so much code without any intervention that I can’t really confidently pick up what it’s done and run with it without digging through all 5k SLoC by hand and convincing myself it’s ok-ish. Realistically, I wouldn’t want to be in this position with a serious code base.

Having got that off my chest - let’s look at some renders; note these’ll mostly be pretty noisy, because I don’t want to leave them running for days rendering:

Renders

A teapot in a Cornell box - you can see some nice reflection and soft shadowing:

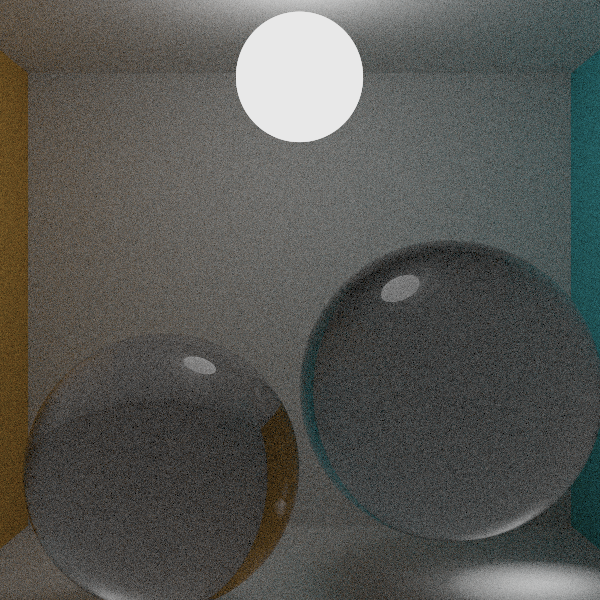

Some spheres in a Cornell box - the colour cast from the path tracing on the right is evident:

A refractive sphere, showing a nice caustic focus spot on the ground underneath; the top looks a bit “off”:

Some glass spheres - these look about right; I think if I re-rendered them with a higher SPP (samples per pixel) and max depth, they’d be spot on:

Code

When I did eventually look at the code at the end, there were some … fun surprises. Here are some of my favourites.

Global Static Mutable Not-Random

I think this one speaks for itself; at least the comment is self-aware.
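For contrast with the snippet below: what the comment calls a proper random number generator usually means each thread (or task) owning its own generator, rather than every caller bumping one `static mut` counter, which is a data race if the function is ever called from more than one thread at once. A minimal sketch under that assumption; `XorShift64` is a hypothetical stand-in for the `rand` crate so the example only needs the standard library, and the generator is passed in explicitly rather than hidden in global state.

```rust
/// Hypothetical xorshift64 generator; each thread or task owns one, so nothing is shared.
struct XorShift64 {
    state: u64,
}

impl XorShift64 {
    fn new(seed: u64) -> Self {
        // A zero state would make xorshift return zeros forever.
        Self { state: seed.max(1) }
    }

    fn next_u64(&mut self) -> u64 {
        let mut x = self.state;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.state = x;
        x
    }
}

/// Picks a random axis (0, 1 or 2) from a caller-owned generator instead of a global counter.
fn rand_axis(rng: &mut XorShift64) -> usize {
    (rng.next_u64() % 3) as usize
}

fn main() {
    let mut rng = XorShift64::new(12345);
    let axes: Vec<usize> = (0..10).map(|_| rand_axis(&mut rng)).collect();
    println!("{:?}", axes);
}
```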

```rust
/// A simple function to generate a random axis (0, 1, or 2)
/// We'd normally use a proper random number generator,
/// but for simplicity we'll just use a deterministic pattern
fn rand_axis() -> usize {
    static mut COUNTER: usize = 0;

    unsafe {
        COUNTER = (COUNTER + 1) % 3;
        COUNTER
    }
}
```

Exciting and unnecessary Mutex

This one is pretty shocking. I noticed that although the raytracer pinned the CPU at 100% (good!), 95% of that was system time (bad!), so I went hunting. This is called every time a pixel is completed, and takes a lock on a single program-wide mutex to update a counter. This is … awful, and rewriting it to not do that made everything dramatically faster. It’s also something that’s kinda subtle - the program still works, it’s just slow.
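For contrast with the mutex-based snippet below, one common way to keep per-pixel progress cheap is to count finished work with an atomic integer and report in coarser chunks. Heavy contention on a single mutex tends to make threads park and wake through the kernel, which fits the high system time described above. This is an illustrative sketch, not the change the author actually made: `AtomicProgress` is a hypothetical type, and `std::thread::scope` stands in for Rayon so the example runs with the standard library alone.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::thread;

/// Hypothetical lock-free progress counter shared by all render threads.
struct AtomicProgress {
    done: AtomicUsize,
    total: usize,
}

impl AtomicProgress {
    fn new(total: usize) -> Self {
        Self { done: AtomicUsize::new(0), total }
    }

    /// Records `n` finished pixels with a single atomic add; no lock is taken.
    /// Relaxed ordering is enough because the count is only used for reporting.
    fn add(&self, n: usize) -> usize {
        self.done.fetch_add(n, Ordering::Relaxed) + n
    }

    /// Fraction of the work completed so far, in [0, 1].
    fn fraction(&self) -> f64 {
        self.done.load(Ordering::Relaxed) as f64 / self.total as f64
    }
}

fn main() {
    let (width, height, threads) = (640usize, 480usize, 8usize);
    let progress = AtomicProgress::new(width * height);

    thread::scope(|s| {
        for t in 0..threads {
            let progress = &progress;
            s.spawn(move || {
                // Each thread takes every `threads`-th row and reports once per row, not per pixel.
                for _row in (t..height).step_by(threads) {
                    // ... render `width` pixels here ...
                    progress.add(width);
                }
            });
        }
    });

    println!("progress: {:.1}%", progress.fraction() * 100.0);
}
```

Reporting once per row rather than once per pixel also divides the number of shared-counter updates by the image width.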

```rust
impl SharedProgress {
    /// Create a new shared progress reporter
    pub fn new() -> Self {
        Self {
            reporter: Arc::new(Mutex::new(ProgressReporter::new())),
        }
    }

    /// Update progress
    pub fn update(&self, progress: f64) {
        if let Ok(mut reporter) = self.reporter.lock() {
            reporter.update(progress);
        }
    }
}
```

Self-Intersection

This one is really subtle. This code is responsible for bouncing a new ray out from an intersection point. It looks about right, right? It’s missing an important bit, though: you need to offset the start point of the new ray a tiny bit along the new direction of travel, because if you don’t, floating-point error means it will sometimes re-intersect the surface at the very point it just left. This leads to weird artifacts in your images. You’d only find this if you dug through all the code and knew that this bit was missing!
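For reference while reading the snippet below, here is the missing step in isolation: start the new ray a small distance along its direction of travel instead of exactly at the hit point. This is a minimal sketch; `Vec3`, `Ray`, `spawn_ray` and the 1e-4 `EPSILON` are hypothetical, not the project's own types or values, and a sensible offset depends on scene scale. Offsetting along the surface normal is a common alternative.

```rust
use std::ops::{Add, Mul};

#[derive(Clone, Copy, Debug, PartialEq)]
struct Vec3 {
    x: f64,
    y: f64,
    z: f64,
}

impl Add for Vec3 {
    type Output = Vec3;
    fn add(self, o: Vec3) -> Vec3 {
        Vec3 { x: self.x + o.x, y: self.y + o.y, z: self.z + o.z }
    }
}

impl Mul<f64> for Vec3 {
    type Output = Vec3;
    fn mul(self, s: f64) -> Vec3 {
        Vec3 { x: self.x * s, y: self.y * s, z: self.z * s }
    }
}

#[derive(Debug)]
struct Ray {
    origin: Vec3,
    direction: Vec3,
}

/// Assumed offset distance: too small and self-intersections return,
/// too large and rays can skip past thin geometry.
const EPSILON: f64 = 1e-4;

/// Builds a secondary ray starting slightly past the hit point along `direction`
/// (assumed normalised), so it cannot immediately re-hit the same surface.
fn spawn_ray(hit_point: Vec3, direction: Vec3) -> Ray {
    Ray { origin: hit_point + direction * EPSILON, direction }
}

fn main() {
    let hit = Vec3 { x: 0.0, y: 1.0, z: 0.0 };
    let dir = Vec3 { x: 0.0, y: 1.0, z: 0.0 };
    let ray = spawn_ray(hit, dir);
    println!("{:?}", ray);
}
```

In the snippet below, this would presumably sit wherever `scattered_ray` is built from `scattered_dir`.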

```rust
// Get the direction from the Bxdf
let (scattered_dir, _pdf, _) = dielectric.sample(-ray.direction, normal, u1, rng.gen::<f64>());

Some(ScatterResult {
    scattered_ray,
    attenuation: self.color,
})
```

Nice Traits

For things that are pretty well documented on the internets, it’s done quite a good job; both of these traits look about right to my casual path-tracing eyes, and as it’s built its code around them, this is rather helpful:

```rust
/// Trait for Bidirectional Reflectance Distribution Functions (BRDFs)
/// These determine how light is reflected from surfaces
pub trait Brdf: Debug + Send + Sync {
    /// Evaluate the BRDF for the given directions
    /// Returns the amount of light reflected from wi to wo
    /// This should be physically plausible and energy-conserving
    fn eval(&self, dirs: &BrdfDirections) -> Vec3;

    /// Sample a direction for the given outgoing direction and normal
    /// Used for Monte Carlo path tracing to importance sample reflected directions
    /// Returns the sampled direction and the associated PDF
    fn sample(&self, wo: Vec3, n: Vec3, u1: f64, u2: f64) -> (Vec3, f64);

    /// Calculate the probability density function for the given directions
    /// This should be consistent with the sample function
    fn pdf(&self, dirs: &BrdfDirections) -> f64;
}
```

Tell me what you really think

Overall I’m pretty impressed with how far it got, but I think without spending a bunch of effort pushing it in the right direction it would’ve been rubbish; I don’t think you can really get good outcomes for complex-ish problems today without understanding the problem yourself. Maybe this isn’t surprising?

… I also think that taking it to this extreme is an awful idea. It’s much better to run short, tight feedback loops with the agent, “iteratively building together”; for me at least, that often saves a bunch of time tapping away at the keyboard.