Infrastructure from code


Infrastructure as code has been an undisputed success. As an industry, we’ve dragged ourselves up the abstraction ladder from hand-rolled servers, through procedural infrastructure APIs and low-level declarative APIs, to modern tools like Pulumi and AWS’ Cloud Development Kit (CDK). These days we can write reusable constructs using our favourite programming languages (“give me a load-balanced, autoscaling, serverless container orchestrated deployment of this Dockerfile please”) that allow us to share and re-use common patterns within teams and the industry.

Today I’d like to have a look at a handful of tools that take a new approach, tentatively named infrastructure-from-code. The idea is to derive infrastructure automatically from the code of the application, rather than having to build it out using a separate language/stack/team/technology. In some cases this is an extension of an existing infra-as-code tool (Pulumi), in others, it is a whole new cloud-first language. There’s a great introduction to the idea on the shuttle blog.

What I’m looking for

I want to be able to write cloud applications using a single codebase to describe both the infrastructure and the business logic, and have the tool explode that out into an idiomatic cloud native deployment. Here’s some queues, topics, and a map-reduce step, here’s some business logic that runs in the different places - go deploy that. Extra points if it runs and debugs locally like a normal process!
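To make that wish a bit more concrete, here is a minimal sketch of the idea in plain TypeScript. It is a toy, not any real tool's API: `App`, `Queue`, `DerivedResource` and `synth` are names I have invented for illustration. Declaring a queue and attaching a handler both registers the business logic and records the resources a deployment would need, so the same code can run locally or be turned into a plan.

```typescript
// Toy illustration only: App, Queue, DerivedResource and synth are hypothetical
// names invented for this sketch, not the API of any real tool.

type QueueHandler = (message: string) => void;

interface DerivedResource {
  kind: "queue" | "function";
  name: string;
  trigger?: string; // for functions: the queue that invokes them
}

class App {
  readonly resources: DerivedResource[] = [];
  private handlers: { [queueName: string]: QueueHandler[] } = {};

  // Declaring a queue in application code also records it as infrastructure.
  queue(name: string): Queue {
    this.resources.push({ kind: "queue", name: name });
    this.handlers[name] = [];
    return new Queue(this, name);
  }

  // Registers the business logic and the function resource that would host it.
  subscribe(queueName: string, fnName: string, handler: QueueHandler): void {
    this.resources.push({ kind: "function", name: fnName, trigger: queueName });
    this.handlers[queueName].push(handler);
  }

  // "Local simulator": deliver a message to every registered handler in-process.
  send(queueName: string, message: string): void {
    (this.handlers[queueName] || []).forEach((handler) => handler(message));
  }

  // "Deployment plan": the infrastructure derived from the declarations above.
  synth(): string[] {
    return this.resources.map((r) =>
      r.kind === "queue" ? "queue " + r.name : "function " + r.name + " <- " + r.trigger
    );
  }
}

class Queue {
  constructor(private app: App, readonly name: string) {}

  onMessage(fnName: string, handler: QueueHandler): void {
    this.app.subscribe(this.name, fnName, handler);
  }
}

// One codebase: resource declarations and business logic side by side.
const app = new App();
const seen: string[] = [];
const orders = app.queue("orders");
orders.onMessage("record-order", (message) => {
  seen.push(message.toUpperCase());
});

app.send("orders", "widget"); // run and debug locally like a normal process
const plan = app.synth();     // or derive what would need to be provisioned
console.log(plan);
```

The real tools below differ a lot in how they get from the first half (code) to the second half (a deployment), but this is roughly the shape I am hoping for.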

What’s out there?

I’m going to dive into four different tools in this post - shuttle, wing, pulumi and encore. Code I’ve written as part of this blog can be found on my github.

Shuttle.rs

Shuttle lets you lightly annotate your existing rust web API with the infrastructure pieces it needs. Shuttle’s deployment process then uses this information to work out what to provision. It currently runs on their own services, on top of AWS, but has historically worked self-hosted generating terraform too - it looks like this will return at some point, but for now you have to let them host it for you.

In terms of support, you get a bunch of rust web frameworks including actix, rocket, and axum. On the cloud resources side, you can provision SQL databases, file storage, and secrets.

To get a handle on a resource, you provide it to the entry point for your framework of choice, like this with axum:

```rust
#[shuttle_runtime::main]
async fn axum(
    #[shuttle_shared_db::Postgres] pool1: PgPool,
    #[shuttle_shared_db::Postgres] pool2: PgPool,
    #[shuttle_static_folder::StaticFolder(folder = "src")] static_folder: PathBuf,
    #[shuttle_static_folder::StaticFolder(folder = "testdata")] test_data: PathBuf,
) -> shuttle_axum::ShuttleAxum {
    let router = Router::new()
        .route("/hello", get(hello_world));
        //.route("/staticpath", get(|| async { path }));

    Ok(router.into())
}
```

… and then run cargo shuttle deploy. Even though I’ve plugged in two different PgPools, I’ve only ended up with one database provisioned. The providers feel more like “opt in” extras than IaC building blocks - I can’t use them to describe some arbitrary configuration of cloud resources, just turn the supported integrations on and off.

Diving deeper on the runtime

Let’s dig around the running application to see how the Shuttle team is hanging things together.

First, if I get my app to try and pull the instance metadata endpoint from AWS, I get a 401, which is probably a good sign!

```
2023-05-16T19:28:09.937898926Z DEBUG ureq::unit: response 401 to GET http://169.254.169.254/latest/meta-data/instance-id
```

If we look at /proc/self/cgroup, we can also see that we’re running in a container:

```
11:hugetlb:/docker/b96118b2dc63ab652f11e3b24bc9a3b1c3e712e658f91d6cb060274e9ccf91a3
10:perf_event:/docker/b96118b2dc63ab652f11e3b24bc9a3b1c3e712e658f91d6cb060274e9ccf91a3
9:pids:/docker/b96118b2dc63ab652f11e3b24bc9a3b1c3e712e658f91d6cb060274e9ccf91a3
....
```

This feels a bit light for the moment. My pathological case - an event driven system - couldn’t be built in a cloud-native fashion with shuttle, as the serverless primitives are missing entirely - shuttle is deploying a container API backend, and plugging DBs and caches in as requested. Having said that, this would be great for simple web APIs in organisations that have invested in rust, and is one I will be keeping an eye on.
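As an aside, the container check I did by eye on /proc/self/cgroup can be sketched as a small function. This is only a heuristic and rests on assumptions: it expects cgroup v1 style lines of the form `<id>:<controllers>:<path>` like the ones shown above, and the function name `looksLikeDocker` is my own.

```typescript
// Heuristic sketch: returns true if any cgroup v1 line places the process
// under a /docker/ path. Assumes lines shaped like "<id>:<controllers>:<path>".
function looksLikeDocker(cgroupText: string): boolean {
  return cgroupText
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.length > 0)
    .some((line) => {
      // Everything after the second colon is the cgroup path.
      const cgroupPath = line.split(":").slice(2).join(":");
      return cgroupPath.indexOf("/docker/") === 0;
    });
}

// Sample input taken from the output above.
const inContainer = looksLikeDocker(
  [
    "11:hugetlb:/docker/b96118b2dc63ab652f11e3b24bc9a3b1c3e712e658f91d6cb060274e9ccf91a3",
    "10:perf_event:/docker/b96118b2dc63ab652f11e3b24bc9a3b1c3e712e658f91d6cb060274e9ccf91a3",
  ].join("\n")
);

// A process sitting in the root cgroup would not match.
const onHost = looksLikeDocker("11:hugetlb:/\n10:perf_event:/");

console.log(inContainer, onHost);
```

In a real process you would pass in the contents of /proc/self/cgroup; other container runtimes and cgroup layouts use different paths, so a negative result proves little.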

Wing

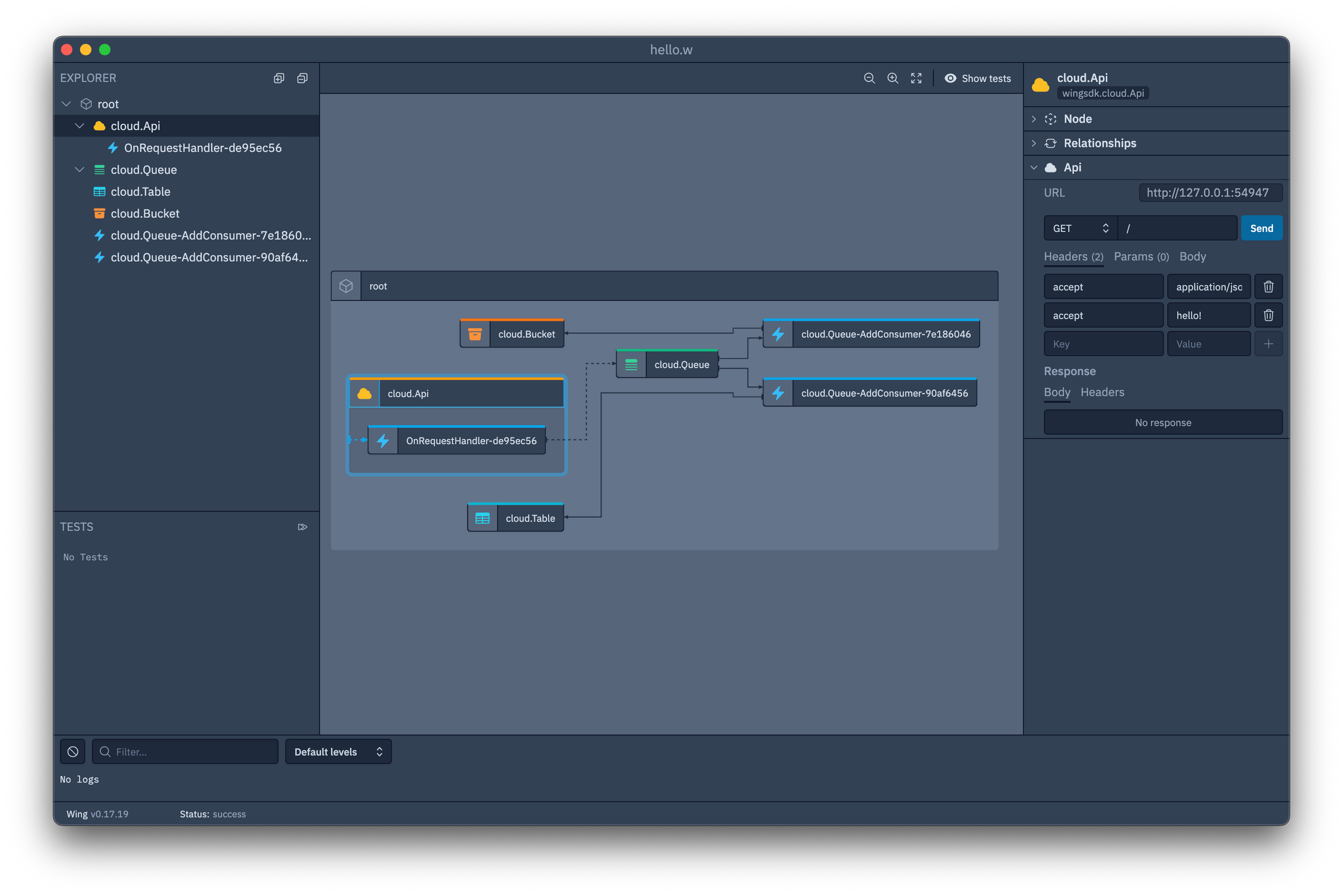

Wing is the most ambitious of the bunch, inventing a whole new language to treat the cloud as one big programmable machine. It aims to abstract away the details of the different providers by implementing various backends - at the moment, tf-aws compiles your program down to terraform modules targeting AWS, and work is being done on Azure and GCP terraform backends too. In addition to running on actual clouds, wing comes with its own local simulator, and a vscode plugin which seems to give basic intellisense and syntax highlighting for the language. Because it supports far fewer things than your typical hyperscaler provides, the surface area the resource model needs to cover is much smaller - it feels like a set of pieces focussed on modern serverless development: queues, buckets, static websites. That fits much better with the event driven system case I introduced earlier.

There are very few examples, the vscode plugin’s autocomplete is not very complete, and the SDK documentation doesn’t seem to always line up with what I can actually run, which makes it quite un-ergonomic at the moment to get working code out. Nonetheless, when you do it is quite succinct and clear - here’s a simple app I wrote that takes an API request, pushes it to a queue, then stores the request into S3 and DynamoDB:

```
bring cloud;

// API requests get pushed to a queue ...
let api = new cloud.Api();
let queue = new cloud.Queue();
api.get("/", inflight(req: cloud.ApiRequest): cloud.ApiResponse => {
  let data = Json.stringify(req);
  log(data);
  queue.push(data);

  return cloud.ApiResponse {
    status: 200,
    body: "Thanks for the request!"
  };
});

// And then taken from the queue and stored into a table, and an S3 bucket
let table = new cloud.Table(cloud.TableProps{
  name: "requests",
  primaryKey: "id",
  columns: { "request_body": cloud.ColumnType.STRING }
});
let bucket = new cloud.Bucket();

// Update the bucket
queue.addConsumer(inflight (message: str) => {
  bucket.put("lastrequest.txt", message);
});

// Update the table
queue.addConsumer(inflight (message: str) => {
  table.insert("lastrequest", message);
});
```

You can use the local simulator to get a nice visualization of it, and inject messages and API calls into the different interfaces. Not everything works here yet - you can’t poke around in tables, for instance - but what does is very pleasant to use:

From here, I run wing compile --target tf-aws hello.w … and get an error that seems to be resolved by removing one of the two queue handlers. For the sake of moving forward, I do this, and then push to AWS with terraform init && terraform apply to see what we end up with.

The Lambda functions have clean, narrowly-defined roles on them - the API lambda to push to the queue, and the queue consumer to pull from the queue and PutObject to the bucket. The Lambdas are each a single, minified JS file, which looks to have been transpiled from typescript. The DynamoDB table, API Gateway, and S3 bucket are likewise as you’d expect. If I hit the API endpoint I end up with an object in my bucket, just like in the simulator.
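To give a feel for what “narrowly-defined” means here, this is a sketch of the kind of least-privilege policies I would expect for the two functions. It is not the exact output of Wing’s tf-aws backend: the ARNs are placeholders, the helper types are mine, and the action lists are my assumption based on what each function has to do (send to the queue; consume from the queue and write objects).

```typescript
// Illustrative only: placeholder ARNs and hand-written policies, not the
// documents Wing actually generated.

interface PolicyStatement {
  Effect: "Allow";
  Action: string[];
  Resource: string[];
}

interface PolicyDocument {
  Version: "2012-10-17";
  Statement: PolicyStatement[];
}

const queueArn = "arn:aws:sqs:us-east-1:123456789012:example-queue"; // placeholder
const bucketArn = "arn:aws:s3:::example-bucket"; // placeholder

// The API function only needs to push messages onto the queue.
const apiFunctionPolicy: PolicyDocument = {
  Version: "2012-10-17",
  Statement: [
    { Effect: "Allow", Action: ["sqs:SendMessage"], Resource: [queueArn] },
  ],
};

// The consumer needs to read from the queue and write objects to the bucket.
const consumerPolicy: PolicyDocument = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Action: ["sqs:ReceiveMessage", "sqs:DeleteMessage", "sqs:GetQueueAttributes"],
      Resource: [queueArn],
    },
    { Effect: "Allow", Action: ["s3:PutObject"], Resource: [bucketArn + "/*"] },
  ],
};

console.log(JSON.stringify({ apiFunctionPolicy, consumerPolicy }, null, 2));
```

The point is that neither function gets a wildcard: each role is scoped to the specific queue or bucket it touches, which is what you would want to write by hand but rarely bother to.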

Overall, I see lots of potential here - when it works, the experience is great - and it’s all serverless! I suspect that getting the language support there - in terms of documentation, tooling integration, and public acceptance - will be the biggest hurdle. The main resources you need are already there. The tooling seems to support sprinkling typescript through the projects, which unlocks the whole JS/TS ecosystem, but users will need to be convinced that this isn’t all just leading to a massive tower of lock-in to a company and toolchain that is, at the moment, relatively unknown.

Pulumi

Pulumi, like AWS’ own CDK, is a tool that lets you write infrastructure-as-code in a regular language of your choice - typescript, java, and so on - rather than in a template style, as with CloudFormation. This tends to be much easier to work with - you get regular programming tools to build abstractions (and can profit from those built by others), much better type checking, and benefit from what amounts to a lot of “automatic best practices”.

Within this world, it turns out that using Pulumi, you can provide the code for Lambdas inline - in the infrastructure definition itself. Normally you’d point to some application bundle elsewhere - a dockerfile or image, a zip, that sort of thing - with the extra tooling and duplication that entails. With Pulumi you can do things like this:

```typescript
let bucket = new aws.s3.Bucket("mybucket");
bucket.onObjectCreated("onObject", async (ev: aws.s3.BucketEvent) => {
    // This is the code that will be run when the Lambda is invoked (any time an object is added to the bucket).
    console.log(JSON.stringify(ev));
});
```

They call this variously magic functions or function serialization and it seems to be implemented as a preprocessor over the top of the regular Pulumi model. As with wing, this lets you build out serverless workloads neatly, in one place, with one definition of both stack and code. Here’s the example I wrote in Wing from above - HTTP API requests are pushed to a queue, and then from the queue one worker writes them to a bucket, and one to a table:

```typescript
import * as pulumi from "@pulumi/pulumi";
import * as aws from "@pulumi/aws";
import * as awsx from "@pulumi/awsx";
import * as apigateway from "@pulumi/aws-apigateway";

import { randomUUID } from "crypto";

// Create an S3 bucket and a dynamoDB table we'll write our
// data to.
const bucket = new aws.s3.Bucket("my-bucket");
const table = new aws.dynamodb.Table("my-table", {
    attributes: [
        { name: "id", type: "S" },
    ],
    hashKey: "id",
    billingMode: "PAY_PER_REQUEST"
})

// Create a queue and handler that'll do the actual writing
const queue = new aws.sqs.Queue("my-queue", {
    visibilityTimeoutSeconds: 200
});

// Event handler to write to the bucket
queue.onEvent("message-to-bucket", async (msg) => {
    let rec = msg.Records[0];
    let id = rec.messageId;

    const s3 = new aws.sdk.S3();
    await s3.putObject({
        Bucket: bucket.bucket.get(),
        Key: id,
        Body: rec.body
    }).promise();
}, {
    batchSize: 1
});

// Event handler to write to the table
queue.onEvent("message-to-table", async (msg) => {
    let rec = msg.Records[0];
    let id = rec.messageId;

    const ddb = new aws.sdk.DynamoDB.DocumentClient();
    await ddb.put({
        TableName: table.name.get(),
        Item: {
            id: id,
            request: rec.body
        }
    }).promise();

}, {
    batchSize: 1
});

// create an API to hit to write to the queue
const api = new apigateway.RestAPI("api", {
    routes: [
        {
            path: "/",
            method: "GET",
            eventHandler: new aws.lambda.CallbackFunction("api-handler", {
                callback: async (evt: any, ctx) => {
                    const sqs = new aws.sdk.SQS();

                    await sqs.sendMessage({
                        QueueUrl: queue.url.get(),
                        MessageBody: JSON.stringify(evt)
                    }).promise();

                    return {
                        statusCode: 200,
                        body: JSON.stringify({
                            "message": "Hello, API Gateway!",
                        })
                    }
                }
            })
        }
    ]
});

// Export the name of the bucket
export const bucketName = bucket.id;
export const apiUrl = api.url;
```

This is quite cool! You have all the power of Pulumi - which is to say, you can already provision almost anything in your cloud of choice - and you can sprinkle in inline functions where you need them, all using a regular programming language with well-established editor integration. In the editor I get all of the niceties I expect in terms of spelunking and autocompleting through both the IaC code and the nested magic lambdas. Wing has the edge with local development, though - pulumi can’t compete with the simulator and the ability to inject messages and requests immediately, locally (however, I’ve noticed there’s some pulumi-LocalStack integration to check out).
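To get an intuition for what “function serialization” involves, here is a deliberately naive sketch. It is not how Pulumi does it, and `serializeHandler` is a name I made up: it simply takes the source text of an inline function and wraps it as a standalone CommonJS module that could be bundled and uploaded. A real implementation has a much harder job, because it also has to capture everything the function closes over (like `bucket` and `table` in my example above).

```typescript
// Naive sketch only: NOT Pulumi's implementation. It works solely for
// functions that reference nothing outside their own body.

type InlineHandler = (event: unknown) => unknown;

// Turn an inline handler into the text of a standalone CommonJS module.
function serializeHandler(handler: InlineHandler): string {
  // Function.prototype.toString returns the function's source text.
  const source = handler.toString();
  return "exports.handler = " + source + ";\n";
}

// A handler written inline, next to the infrastructure definition.
const inlineHandler: InlineHandler = (event) => {
  return { statusCode: 200, body: JSON.stringify(event) };
};

const moduleText = serializeHandler(inlineHandler);
console.log(moduleText);
```

Seen this way, the appeal is obvious: the packaging step that normally lives in a separate build pipeline collapses into the deployment tool itself.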

Encore

Encore is similar to shuttle - it is built around a single established language, in this case golang rather than rust, lets you use existing mechanisms within the language to define your API, and supports a curated subset of cloud resources. In this case we get cache, pub/sub, and an SQL database. Also like shuttle but unlike Wing/Pulumi, compute is provided by container. API endpoints are discovered by magic comment - here’s their hello world example:

```go
//encore:api public path=/hello/:name
func World(ctx context.Context, name string) (*Response, error) {
	msg := "Hello, " + name + "!"
	return &Response{Message: msg}, nil
}
```

Again like Shuttle, you get a development console of their own creation to deploy to for development and testing, but they support and encourage you to use either AWS or GCP for production.
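The “magic comment” discovery is easy to picture as a small parser. The sketch below is mine, not Encore’s: `parseApiDirective` and the `ApiDirective` shape are hypothetical, and I am only assuming that a directive is a list of bare flags and key=value options, which is what the hello world example looks like.

```typescript
// Hypothetical sketch of parsing an "//encore:api" directive. This is not
// Encore's parser; the grammar is inferred from the single example above.

interface ApiDirective {
  flags: string[];                     // bare words such as "public"
  options: { [key: string]: string };  // key=value pairs such as path=/hello/:name
  pathParams: string[];                // names of ":param" path segments
}

function parseApiDirective(comment: string): ApiDirective | null {
  const prefix = "//encore:api";
  const text = comment.trim();
  if (text.indexOf(prefix) !== 0) {
    return null; // an ordinary comment, not a directive
  }

  const flags: string[] = [];
  const options: { [key: string]: string } = {};
  const tokens = text.slice(prefix.length).split(/\s+/).filter((t) => t.length > 0);
  tokens.forEach((token) => {
    const eq = token.indexOf("=");
    if (eq === -1) {
      flags.push(token);
    } else {
      options[token.slice(0, eq)] = token.slice(eq + 1);
    }
  });

  // Path segments that start with ":" become handler parameters.
  const pathParams = (options["path"] || "")
    .split("/")
    .filter((segment) => segment.charAt(0) === ":")
    .map((segment) => segment.slice(1));

  return { flags: flags, options: options, pathParams: pathParams };
}

const directive = parseApiDirective("//encore:api public path=/hello/:name");
console.log(directive);
```

In the Go example, the `name` path parameter lines up with the `name string` argument of `World`, which is presumably how the framework knows what to pass in.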

Unfortunately I’ve spent too much time on this post and will have to come back to Encore at a later date 😄

Wrapping Up

There’s a real split between these 4 tools - existing language vs. annotation, and containers vs. serverless. I am quite biased towards the serverless options here - I like the idea of being able to treat the cloud as a big, programmable computer, and both wing and pulumi let me do this, whereas shuttle and encore focus on deploying a more traditional backend and bolting some state stores around it.

If I were to pick something to use, today, to make my life easier, I’d start using Pulumi. I often build small demos that show off some orchestration of components within a cloud, where the runtime code itself tends to be very short. This is perfect for that - it’s a well-established tool, you can deploy anything you want with it, and you can simply stick your code inline and forget all the extra things you need (“how do I bundle typescript for lambda again and where are my event models?”).

I am not fully convinced of the value proposition of Shuttle and Encore at the moment. I like these small focussed tools - and these really feel like they’ve been inspired by the glory days of Heroku. But my experience has been that for bigger systems, you need some easy trapdoors to get out and start defining more than what their core abstractions want to provide. I don’t see how well that can bolt on here. There’s also a bit of a chicken-and-egg thing going on - to tightly couple your infrastructure deployment onto a relatively small, new tool, you have to put a lot of trust in it. I am nevertheless excited to see where the teams take these - it’s early days for IfC and it’s hard to know what’s going to work out best.

Finally - wing! It’s very ambitious - and in a more general way than just “better IaC”. Using the simulator to go and poke messages and functions and APIs as you edit the code - it almost feels like Smalltalk/Pharo. I think it’s clear that we as an industry have left a lot behind in terms of developer experience over the years, especially as we’ve pushed most everything into the cloud, and projects like this that aim for something more are unambiguously what we need.