Another day, another Post-it note pushed dramatically from one side of the productivity whiteboard to the other. Although your industry has become perversely obsessed with the performance art of the agile excuse - sentences beginning with “I know this task was only 2 story points, but …” - this one was actually straightforward. You’ve spent a bunch of time building with Java & Quarkus, and this proof-of-concept ticket was an easy one to knock out. Nonetheless, in the dual spirit of the language-and-framework battle royale and providing a reasonable example for the more junior developers in your team - who don’t all have the same experience - you decide to write up a concrete walkthrough of how your target architecture could work. You put your thoughts down in notes.

But first, why Quarkus?

… your junior, less-enlightened coworkers ask. “Well”, you begin, sighing deeply to underline the extensive experience you are about to allude to:

- It benefits from Java’s broad, performant ecosystem, but is unencumbered by its long history.

- It’s not Spring, you don’t need an EJB container, and you don’t need to give Oracle any more money if you don’t want. Larry’s doing fine.

- Container first, but can run on serverless too. It does clever things like compile-time dependency injection, and has first-class support for async programming. It is fast, and cares about having a tiny startup time and memory footprint.

- It has a broad palette of extensions that let you, with a single `quarkus extension add`, quickly bolt external services onto your app in an opinionated fashion. Want a PostgreSQL database? Here! Also - a container running it will be automagically wired into your developers’ local environments when they launch the service.

- It can be compiled with GraalVM out-of-the-box, a fancy tool for building native binaries out of Java applications, which gives us a lever to make things “native fast” without much effort

You paste this into the team slack, and await the inevitable stream of :thumbsup: and :cooldoge: emojis to follow.

You wait some more. Perhaps long-winded bulleted lists are not the way to win the hearts and minds of the people? You guess you’ll need to actually show them what to get excited about.

Bootstrapping

You’ve not started a new Quarkus project for a while, so you jump over to the getting started page to make sure your environment has all the shiny new things:

```shell
# Install Quarkus
curl -Ls https://sh.jbang.dev | bash -s - trust add https://repo1.maven.org/maven2/io/quarkus/quarkus-cli/
curl -Ls https://sh.jbang.dev | bash -s - app install --fresh --force quarkus@quarkusio

# Create our app
quarkus create app passes-java

# Looking for the newly published extensions in registry.quarkus.io
# -----------
#
# applying codestarts...
# 📚 java
# 🔨 maven
# 📦 quarkus
# 📝 config-properties
# 🔧 tooling-dockerfiles
# 🔧 tooling-maven-wrapper
# 🚀 rest-codestart
#
# -----------
# [SUCCESS] ✅ quarkus project has been successfully generated in:
# --> /Users/scott.gerring/Documents/code/systems-to-services/passes-java
# -----------
# Navigate into this directory and get started: quarkus dev
```

At this point, we actually have a running, hot-reloading developer environment! We can simply run `quarkus dev` to get started:

Listening for transport dt_socket at address: 5005__ ____ __ _____ ___ __ ____ ______ --/ __ \/ / / / _ | / _ \/ //_/ / / / __/ -/ /_/ / /_/ / __ |/ , _/ ,< / /_/ /\ \--\___\_\____/_/ |_/_/|_/_/|_|\____/___/2024-11-26 10:32:21,234 INFO [io.quarkus] (Quarkus Main Thread) passes-java 1.0.0-SNAPSHOT on JVM (powered by Quarkus 3.16.4) started in 0.934s. Listening on: http://localhost:8080

2024-11-26 10:32:21,236 INFO [io.quarkus] (Quarkus Main Thread) Profile dev activated. Live Coding activated.2024-11-26 10:32:21,236 INFO [io.quarkus] (Quarkus Main Thread) Installed features: [cdi, rest, smallrye-context-propagation, vertx]

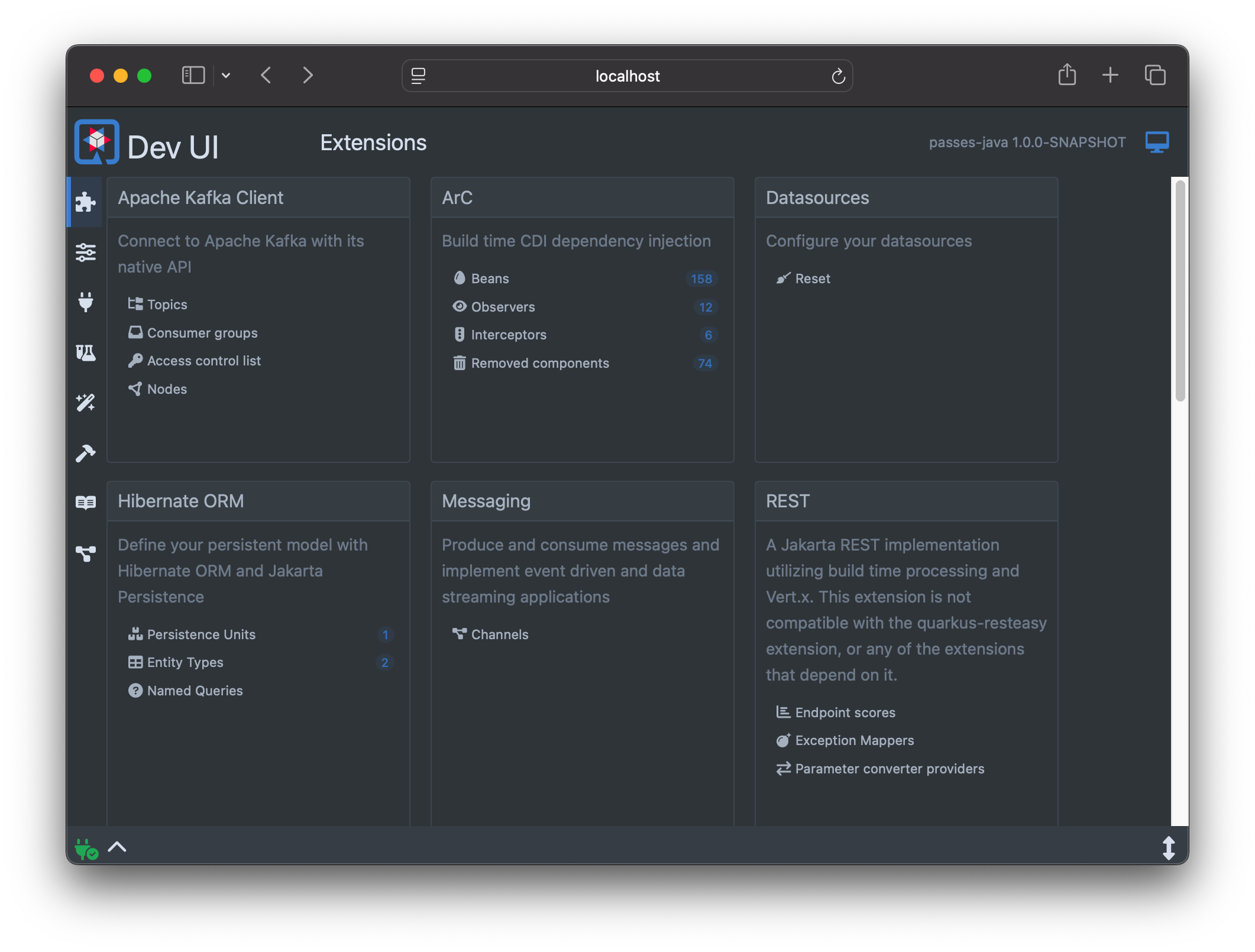

--Tests pausedPress [e] to edit command line args (currently ''), [r] to resume testing, [o] Toggle test output, [:] for the terminal, [h] for more options>Connecting to http://localhost:8080, you even get a jazzy Dev UI that looks like it’ll come in handy when there’s a bit

more going on in your apps. ORMs! Messaging! Beans!

From the Home tab, you can see that you’ve got a /hello endpoint. The code looks like this:

```java
package org.acme;

import jakarta.ws.rs.GET;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.core.MediaType;

@Path("/hello")
public class GreetingResource {

    @GET
    @Produces(MediaType.TEXT_PLAIN)
    public String hello() {
        return "Hello from Quarkus REST";
    }
}
```

Super straightforward, right? And we’re even using the standard `jakarta.ws.rs` annotations to define our handlers; there’s nothing Quarkus-specific here.

Adding external services

You know you’re going to need integration with PostgreSQL, Kafka, OpenTelemetry, and that OpenStreetMap upstream API. You also know that you want everything to be async/reactive where possible. Diving through the onboarding guide, you find what you need:

- A reactive datasource configuration, for reactive Postgres

- An integration with SmallRye for reactive Kafka

- The OpenTelemetry extension, rather unsurprisingly, for OpenTelemetry

- The quarkus-rest-client extension, once again very surprisingly, for an HTTP client

In each case, a simple `quarkus extension add {...}` from the CLI updates your app. Remembering Panache - a simplified ORM wrapper - and your undying focus on developer experience, you quickly bolt this on too.

Next, having let the framework frame out the shape of your service, you get into writing some actual code …

Actual coding!

The Database bit

You start by defining your data model, so that you have something to bind back to your REST API. As you’ve picked Panache, this is super straightforward, and very similar to the ORM mapping attributes you’d see in most other languages:

```java
@Entity
@Table(name = "passes")
public class Pass extends PanacheEntityBase {

    // We extend PanacheEntityBase, so we can customize ID, so we can use a linear sequence generator.
    // This makes testing easier, as our IDs don't jump around!
    @Id
    @GeneratedValue(strategy = GenerationType.SEQUENCE, generator = "passes_seq")
    @SequenceGenerator(name = "passes_seq", sequenceName = "passes_SEQ", allocationSize = 1)
    public Long id;

    @Column(name = "name")
    public String name;

    @ManyToOne(fetch = FetchType.EAGER)
    @JoinColumn(name = "country_id", nullable = false)
    public Country country;

    // .... and so on ....
}
```

Probably actually your granddad's ORM mapping; some things are timeless.

It occurs to you that you probably want some sample data, so you take advantage of Quarkus’ import.sql feature to drop some in; not very “production ready” - you’d need to use something like Flyway for that - but perfect for knocking out a PoC.

```sql
INSERT INTO country (shortcode, name) VALUES ('CH', 'Switzerland');
INSERT INTO passes (id, name, country_id, ascent, latitude, longitude)
VALUES (nextval('passes_seq'), 'Albis Pass', 'CH', 793, 47.276, 8.522)
       /* , ... and so on */;
```

Seeding test data in an ordinary SQL-ish fashion

Starting your app, you are pleased to remember that Quarkus has gone and spun up a local PostgreSQL container for you, and plumbed it into your development environment! Time to add an API and start to push some data in and out.

The API bit

Next up, you go to knock up the HTTP API you designed earlier. This is very much on-rails stuff, you think, and don’t expect much friction. The GetAll is straightforward enough, although you have to re-check some examples to remember how Mutiny, the reactive programming library used by Quarkus, works:

```java
@Path("/api/v1/passes")
public class PassResource {

    @GET
    @Produces(MediaType.APPLICATION_JSON)
    public Uni<List<PassDTO>> getAll() {
        return Pass.<Pass>findAll().list() // panache, making data access easy
            .map(passes -> passes.stream()
                .map(pass -> new PassDTO(pass.id, pass.name, pass.country.getName(),
                        pass.ascent, pass.latitude, pass.longitude))
                // Functional programming in Java can never be beautiful,
                // you sadly remind yourself as you type the necessary
                // incantation
                .collect(Collectors.toList()));
    }
```

Implementing a GetAll using Mutiny and Panache
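For the juniors' benefit, you jot down roughly what the `PassDTO` being built there looks like, since the listing never shows it. This is a minimal sketch rather than the PoC's actual class: the field list is inferred from the constructor call above, the `Pass` and `Country` classes are persistence-free stand-ins so the snippet compiles on its own, and `PassDTO.from` and `PassMapping` are hypothetical helpers.

```java
import java.util.List;

// Stand-in for the Country entity: only the getter the handler calls.
class Country {
    private final String name;

    Country(String name) { this.name = name; }

    String getName() { return name; }
}

// Stand-in for the Pass entity: public fields, Panache-style, but no persistence.
class Pass {
    public Long id;
    public String name;
    public Country country;
    public int ascent;
    public double latitude;
    public double longitude;

    Pass(Long id, String name, Country country, int ascent, double latitude, double longitude) {
        this.id = id;
        this.name = name;
        this.country = country;
        this.ascent = ascent;
        this.latitude = latitude;
        this.longitude = longitude;
    }
}

// Assumed DTO shape: public fields, in the order the handler passes them.
class PassDTO {
    public Long id;
    public String name;
    public String country; // the country's name, not the entity
    public int ascent;
    public double latitude;
    public double longitude;

    PassDTO() {} // no-arg constructor, which JSON mappers typically want

    PassDTO(Long id, String name, String country, int ascent, double latitude, double longitude) {
        this.id = id;
        this.name = name;
        this.country = country;
        this.ascent = ascent;
        this.latitude = latitude;
        this.longitude = longitude;
    }

    // Hypothetical helper: keeps the field-by-field copy in one place.
    static PassDTO from(Pass pass) {
        return new PassDTO(pass.id, pass.name, pass.country.getName(),
                pass.ascent, pass.latitude, pass.longitude);
    }
}

class PassMapping {
    static List<PassDTO> toDtos(List<Pass> passes) {
        // Stream.toList() (Java 16+) is a shorter spelling of collect(Collectors.toList()),
        // though the list it returns is unmodifiable.
        return passes.stream().map(PassDTO::from).toList();
    }

    public static void main(String[] args) {
        Pass albis = new Pass(1L, "Albis Pass", new Country("Switzerland"), 793, 47.276, 8.522);
        PassDTO dto = toDtos(List.of(albis)).get(0);
        System.out.println(dto.name + " (" + dto.country + ")");
    }
}
```

With a helper like `from`, the lambda in the handler could shrink to a method reference, which may or may not count as beautiful.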

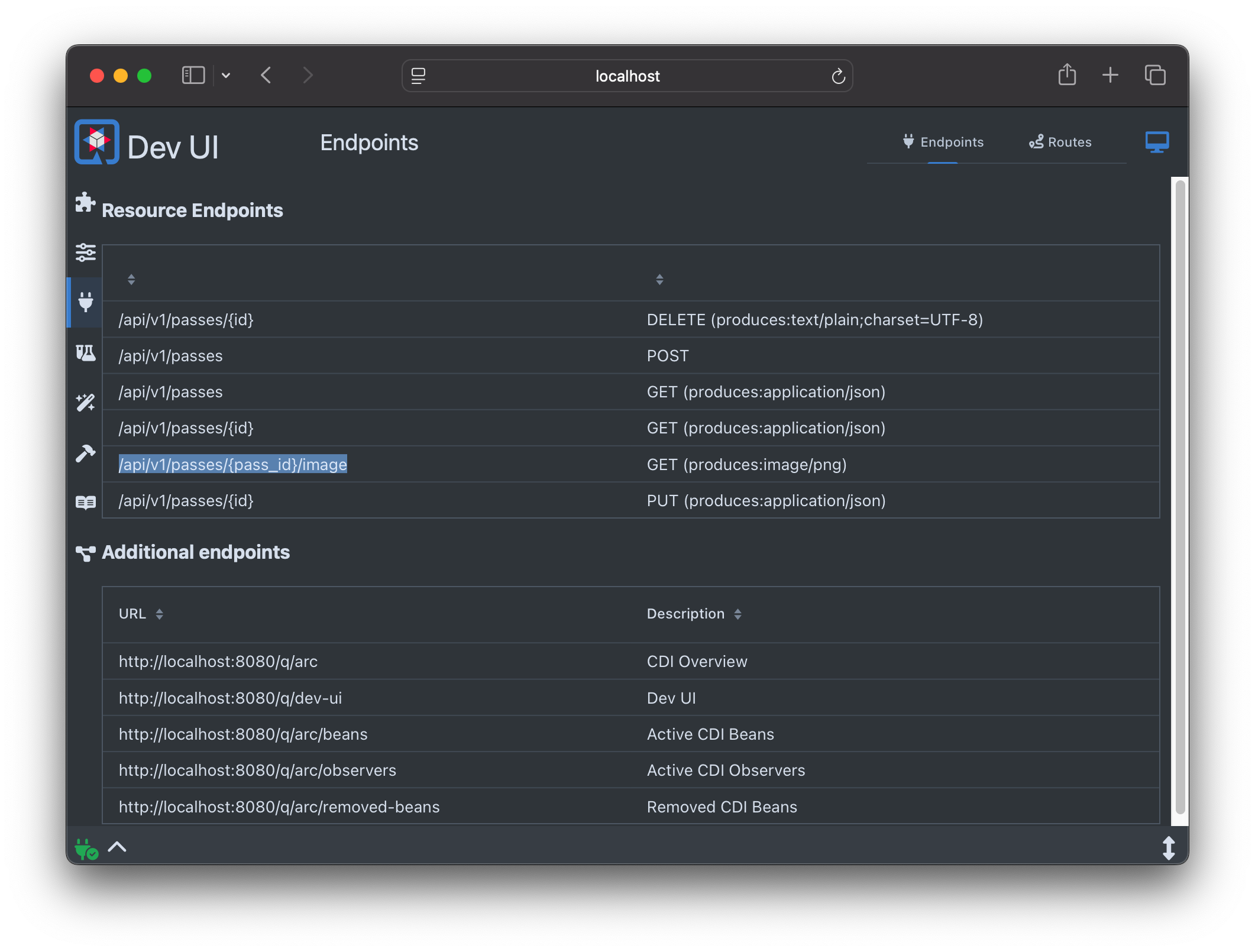

Once you’ve remembered the shape of the necessary incantations, you quickly finish off the other endpoints, and drop a stub in place for the image endpoint for good measure. Jumping back into your Quarkus dev console, you can see your new endpoints:

You knock out some quick curls to make sure it works, then encode them into unit tests for good measure, once again relishing the Quarkus magic that gives you an actual running database in your test environment without any extra ado:

```java
@QuarkusTest
public class PassResourceTest {

    @BeforeEach
    public void prepareDb() {
        // Setup some test data
    }

    @Test
    public void testUpdatePass() {
        given()
            .contentType("application/json")
            .body("{\"name\":\"Updated Pass\",\"ascent\":1600,\"country\":\"Switzerland\"}")
        .when().put("/api/v1/passes/2")
        .then()
            .statusCode(200)
            .body("name", is("Updated Pass"))
            .body("country", is("Switzerland"))
            .body("ascent", is(1600));
    }

    // ...
}
```

The Kafka bit

If Franz Kafka had known about message brokers, The Metamorphosis would have been about a dev waking up one morning to find their streams mysteriously unpartitioned, and an ops team demanding the submission of arcane Jiras to resolve it, you think to yourself, as you peruse the Kafka documentation once again.

Fortunately in this case you don’t actually have to run this thing in production, which means that partitioning, ZooKeeper, and retention policies are all a tomorrow problem. Quarkus dev services to the rescue once more! Freed of the need to do any of the hard parts, setting up Kafka is actually kinda easy, you think to yourself:

```java
    // An async channel to write our events out
    @Channel("passes")
    MutinyEmitter<PassEvent> passEventEmitter;

    // Emits an event (Created / Updated / Deleted) for a given pass
    private Uni<Void> emitEvent(PassEvent.EventType et, long id, String name, String countryName, int ascent) {
        PassEvent event = new PassEvent(id, name, countryName, ascent, et);
        return passEventEmitter.send(event);
    }

    // Encouraged by a sense of _radical transparency_, you decide
    // to highlight the gnarliest-looking change to your REST API to
    // support emitting events - the PUT method.
    //
    // "Nothing some inline comments won't fix!" you mutter to yourself,
    // as you set out to diminish the horror.
    @PUT
    @Path("/{id}")
    @Produces(MediaType.APPLICATION_JSON)
    public Uni<Response> updatePass(@PathParam("id") Long id, PassDTO passDTO) {
        return Pass.<Pass>findById(id)
            .onItem().ifNotNull().transformToUni(pass ->
                Country.find("name", passDTO.country).firstResult() // Does the country exist?
                    .onItem().ifNotNull().transformToUni(country -> {
                        // If it does, chuck it on the pass
                        pass.name = passDTO.name;
                        pass.ascent = passDTO.ascent;
                        pass.country = (Country) country;

                        return Panache.withTransaction(() ->
                            pass.persist() // try to write the pass
                                .onItem().transformToUni(updated ->
                                    // Map the saved model back out to our DTO
                                    emitEvent(PassEvent.EventType.Updated, pass.id, pass.name,
                                            pass.country.getName(), pass.ascent)
                                        .replaceWith(Response.ok(
                                                new PassDTO(pass.id, pass.name, pass.country.getName(),
                                                        pass.ascent, pass.latitude, pass.longitude))
                                            .build())));
                    })
                    // If we can't find the country or the pass, return sensible errors
                    .onItem().ifNull().failWith(() ->
                        new WebApplicationException("Country not found", Response.Status.BAD_REQUEST)))
            .onItem().ifNull().failWith(() ->
                new WebApplicationException("Pass not found", Response.Status.NOT_FOUND));
    }
```

So … that was not as aesthetically pleasing as you remember. But also - you told yourself you’d do everything async, and you like writing in a functional style, so you have to consider the possibility you are your own worst enemy here. Besides, your blog supports horizontal scrollbars.
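The handler leans on a `PassEvent` type that these notes never show. A plausible shape, sketched purely from the `new PassEvent(id, name, countryName, ascent, et)` call and the Created / Updated / Deleted comment above, might be a small record like the one below. The component names are guesses, and how the event gets serialized onto the topic is not covered here.

```java
// Assumed event payload: component order mirrors the constructor call in emitEvent.
record PassEvent(long id, String name, String countryName, int ascent, EventType type) {

    // The three lifecycle events named in the emitEvent comment.
    enum EventType { Created, Updated, Deleted }

    public static void main(String[] args) {
        PassEvent event = new PassEvent(2L, "Updated Pass", "Switzerland", 1600, EventType.Updated);
        // Records generate a readable toString(), handy when eyeballing messages.
        System.out.println(event);
    }
}
```

A record keeps the event immutable once it is built, which seems like a reasonable property for something you are about to hand to a message broker.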

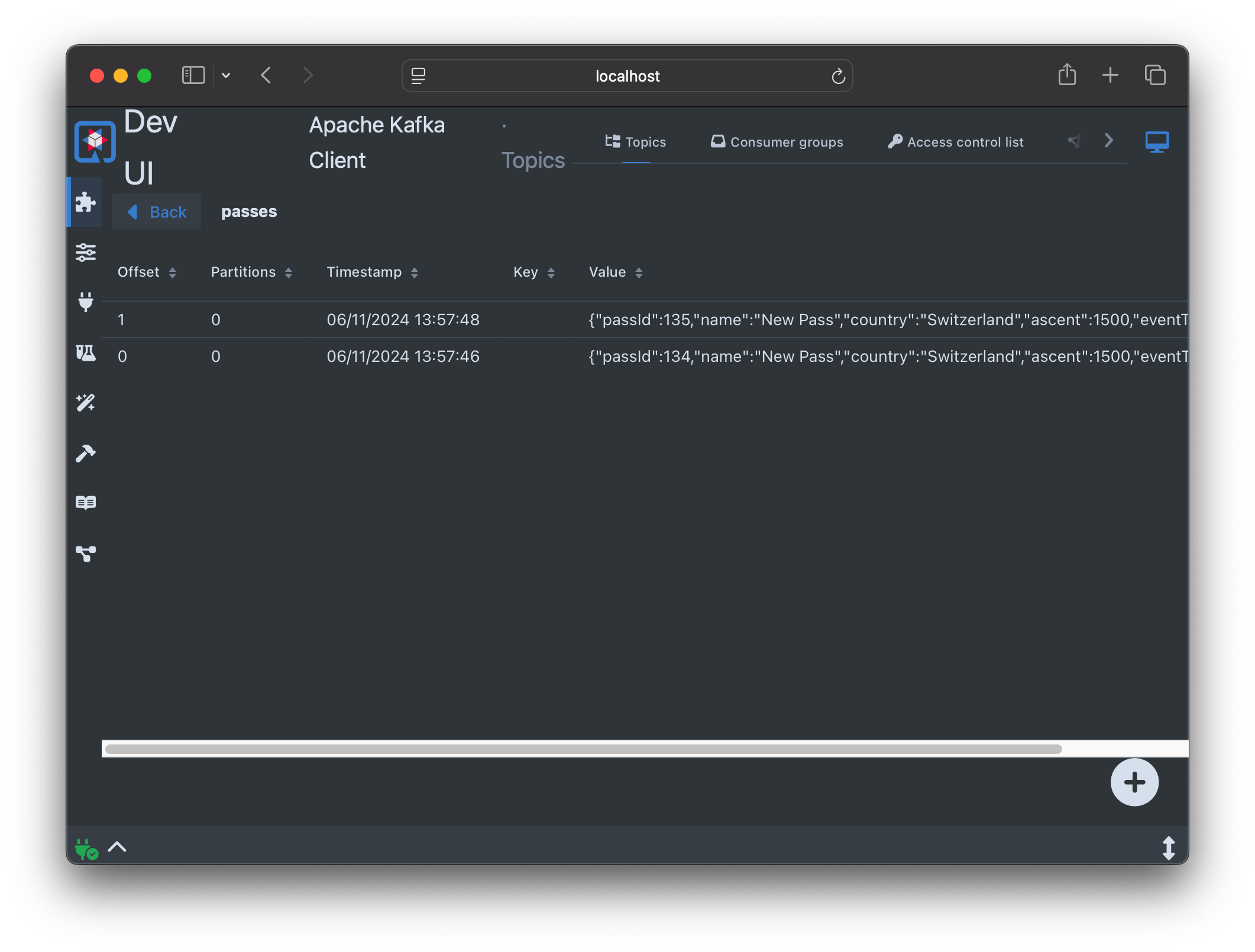

Regardless - you plough on, adding events to the other mutating handlers - and are pleased to discover, once again, that everything has been automagically plumbed up, and you can even see your messages flying through on the dev console!

The Parallel HTTP Client bit

Next, you remember you promised to chuck in an API to render aerial images of the passes. Something about “concurrency” and “upstream HTTP requests”. You spent a bunch of time doing geospatial in the past, and a vague sense of dread creeps down your spine. Coordinate systems? WGS84? Nah - that’s all noise; let’s just munge some OpenStreetMap tiles together and try and make sure they probably mostly cover the coordinates given. After literal hours of messing about in an idiotic coordinate system, you produce something that roughly works. Aware that your colleagues already think you might be taking this thing a little too seriously, you choose to only highlight the bits that are relevant for the PoC - making HTTP calls, and dealing with concurrency - whilst obscuring the rest of the sausage-making infrastructure.
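For anyone wondering what the coordinate munging boils down to, the core of it is usually the standard OpenStreetMap "slippy map" conversion from latitude/longitude to tile indices. The following is a minimal sketch assuming that usual Web Mercator tiling scheme; `SlippyTiles` and `TileIndex` are names made up for this example and are not the PoC's actual classes.

```java
// Hypothetical value type: a tile's zoom level plus its column (x) and row (y).
record TileIndex(int zoom, int x, int y) {}

final class SlippyTiles {
    // Web Mercator cannot represent the poles; the tiling scheme stops at roughly this latitude.
    static final double MAX_LAT = 85.05112878;

    static TileIndex fromLatLon(double lat, double lon, int zoom) {
        int n = 1 << zoom; // number of tiles along each axis at this zoom level
        double clampedLat = Math.max(-MAX_LAT, Math.min(MAX_LAT, lat));
        double latRad = Math.toRadians(clampedLat);

        // Longitude maps linearly onto columns; latitude goes through the Mercator projection.
        double xf = (lon + 180.0) / 360.0 * n;
        double yf = (1.0 - Math.log(Math.tan(latRad) + 1.0 / Math.cos(latRad)) / Math.PI) / 2.0 * n;

        // Clamp so that lon = 180 or the latitude limits don't fall off the edge of the grid.
        int x = Math.min(n - 1, Math.max(0, (int) Math.floor(xf)));
        int y = Math.min(n - 1, Math.max(0, (int) Math.floor(yf)));
        return new TileIndex(zoom, x, y);
    }

    public static void main(String[] args) {
        // The Albis Pass coordinates from the sample data, at an arbitrarily chosen zoom level.
        System.out.println(fromLatLon(47.276, 8.522, 10));
    }
}
```

Covering a radius around a point then comes down to converting the corners of a bounding box and enumerating every tile index in between, which is presumably where the literal hours went.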

First, we need an interface to represent the OSM API that we can inject into our code:

```java
@Path("/")
@RegisterRestClient
interface OsmTileClient {

    @GET
    @Path("{z}/{x}/{y}.png")
    @ClientHeaderParam(name = "User-Agent", value = "passes-java-demo")
    Uni<byte[]> fetchTile(@PathParam("z") int zoom, @PathParam("x") int x, @PathParam("y") int y);
}
```

Then, we need to use it:

```java
@ApplicationScoped
public class OsmImageGenerator {

    // ....

    // Grab our REST client
    @RestClient
    OsmTileClient osmTileClient;

    /**
     * Generates an aerial image of a given area based on latitude,
     * longitude, radius (km), and image size.
     *
     * @return A `Uni<byte[]>` containing the generated image as a PNG byte array.
     */
    @WithSpan // We'll come back to this later!
    public Uni<byte[]> generateImage(double lat, double lon, double radiusKm, int sizePixels) {
        LOG.info("Starting image generation...");

        LatLong center = new LatLong(lat, lon);

        return Uni.createFrom().item(() -> computeBoundingBox(center, radiusKm, sizePixels))
            .flatMap(tileBox -> fetchTilesAsync(tileBox)
                .flatMap(tiles -> assembleImage(tileBox, tiles, sizePixels)));
    }

    /** Fetches all OSM tiles referenced in the given tileBox async in parallel **/
    private Uni<Map<TileCoordinate, BufferedImage>> fetchTilesAsync(ConstrainedTileBox tileBox) {
        Set<TileCoordinate> coordinates = generateTileCoordinates(tileBox.getTileBox());

        return Multi.createFrom().items(coordinates.stream())
            .onItem().transformToUniAndMerge(tile ->
                osmTileClient.fetchTile(tile.z, (int) tile.x, (int) tile.y)
                    .onItem().transform(tileBytes -> {
                        try {
                            return Map.entry(tile, ImageIO.read(new ByteArrayInputStream(tileBytes)));
                        } catch (IOException e) {
                            throw new RuntimeException("Failed to decode tile image", e);
                        }
                    }))
            .collect().asMap(Map.Entry::getKey, Map.Entry::getValue);
    }

    /** Lots of other nasty stuff down here, you think, pressing the delete key
        before submitting the file to your Quarkus writeup. **/
```

The Observability bit

Once again, you think to yourself, Quarkus has made this all a little too easy - a quick `quarkus extension add opentelemetry`, a couple of properties dropped into the configuration, and Bob is your metaphorical uncle.

Reflecting back on that one time you worked for an observability company, you remember the three signals - logs / traces / metrics. Aware that you often want to customize these things, and the ability to do so is something worth testing out for this stack, you add a custom span to your telemetry, to capture the image generation:

```java
    @WithSpan
    public Uni<byte[]> generateImage(double lat, double lon, double radiusKm, int sizePixels) {
        LOG.info("Starting image generation...");
```

Adding a custom span to capture imageGeneration

And a metric to capture request rates against the different endpoints:

```java
    // Our counter
    LongCounter apiCallCounter;

    // Inject the meter provider into the pass
    public PassResource(Meter meter) {
        apiCallCounter = meter.counterBuilder("get-pass-calls")
            .setDescription("Calls made to fetch from the pass APIs")
            .setUnit("invocations")
            .build();
    }

    // Then, bump the counter for each request type, e.g.

    @GET
    @Produces(MediaType.APPLICATION_JSON)
    public Uni<List<PassDTO>> getAll() {

        apiCallCounter.add(1, Attributes.of(AttributeKey.stringKey("api"), "getPasses"));
        // ...
```

Counting request rates per API resource

These days everything supports OTLP, so you point your app at Datadog’s agent. This is going to make it easy to compare performance between stacks, you think to yourself!

But is it fast?

You have been on the internet for long enough to know two things to be true:

- When it comes to battles of the language, everyone freaks out about throughput - how many requests-per-second (RPS) you can push through your technology-of-choice

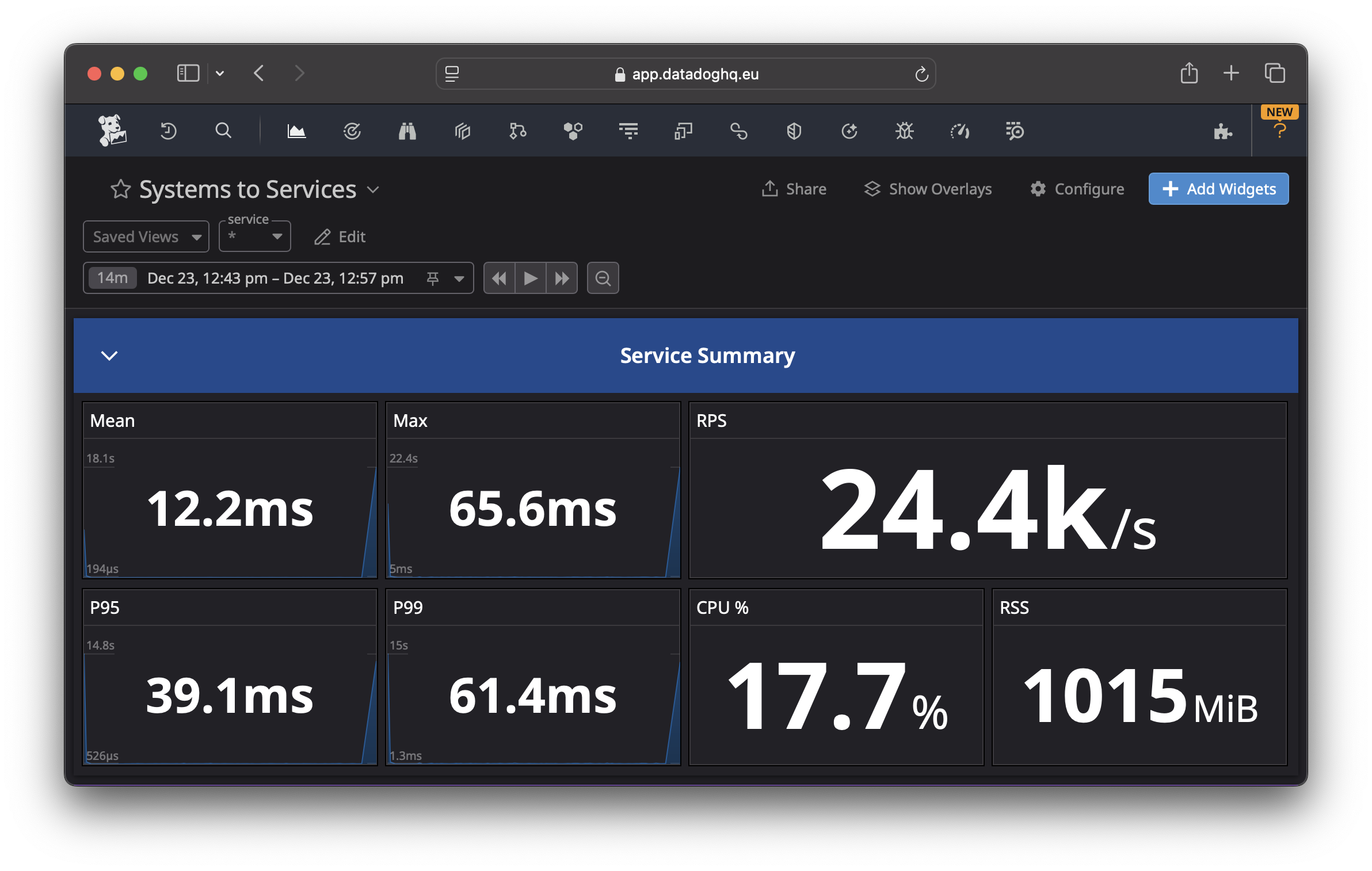

- Comparing throughput is extremely fraught

So, you figure, it’s important to pay some attention to performance, but not freak out about it. In addition to your request rate and process metric monitoring in Datadog, you set up a quick supporting environment for the different pieces you need - a local, uncontainerized PostgreSQL install, an instance of Kafka, and a basic Locust test script for both the JSON-y API and the image API. To keep things simple you’ll run this all on your rather overpowered MacBook and keep an eye on the usage of the supporting services to make sure that they aren’t causing performance bottlenecks.

```python
from locust import HttpUser, TaskSet, task

class UserBehavior(TaskSet):
    @task(1)
    def example_task(self):
        self.client.get("/api/v1/passes/10")
        # self.client.get("/api/v1/passes/10/image")

class WebsiteUser(HttpUser):
    tasks = [UserBehavior]
```

A simple locustfile to load test the API

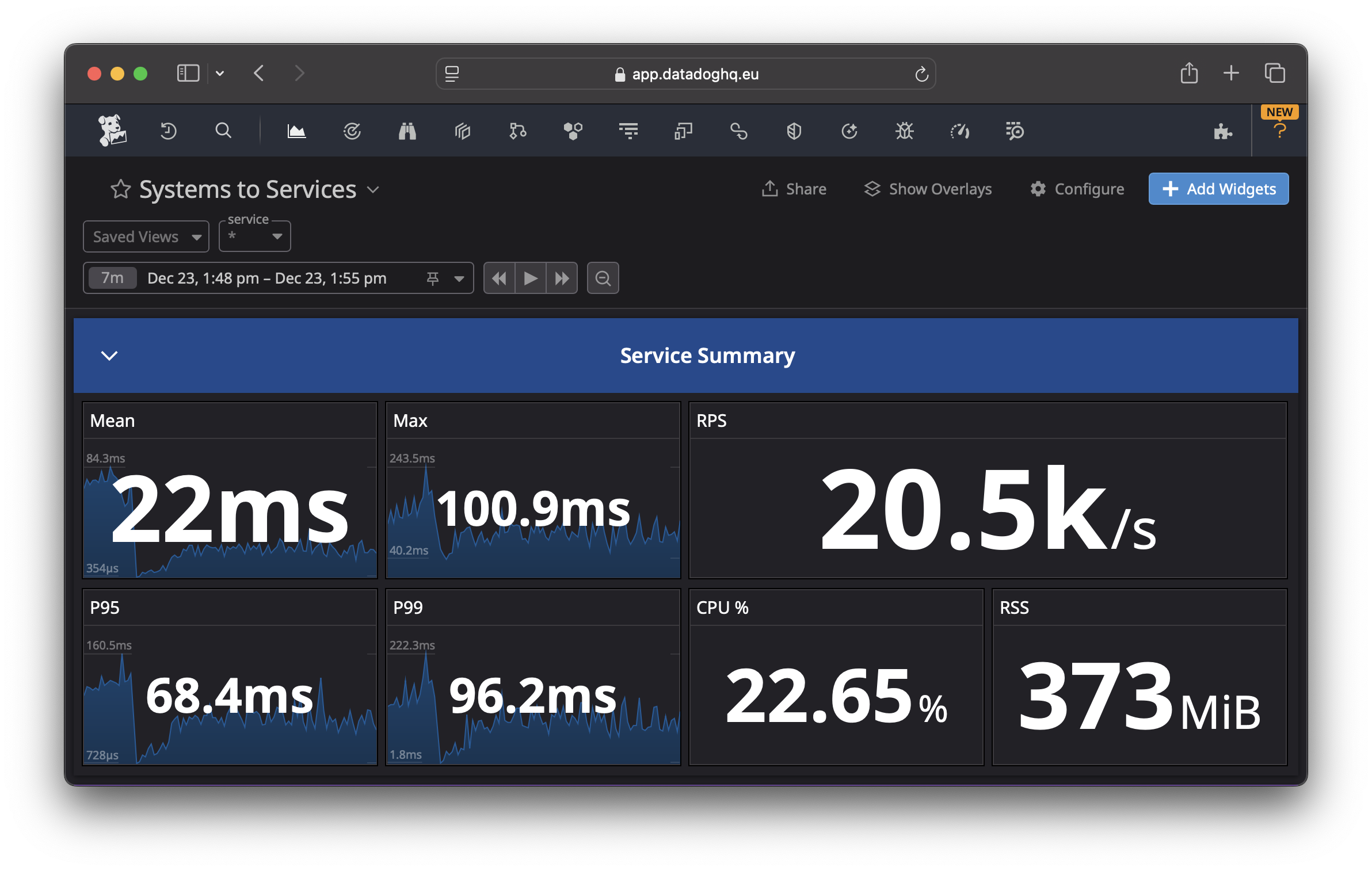

And with that, we have load test results for the JVM:

And, GraalVM:

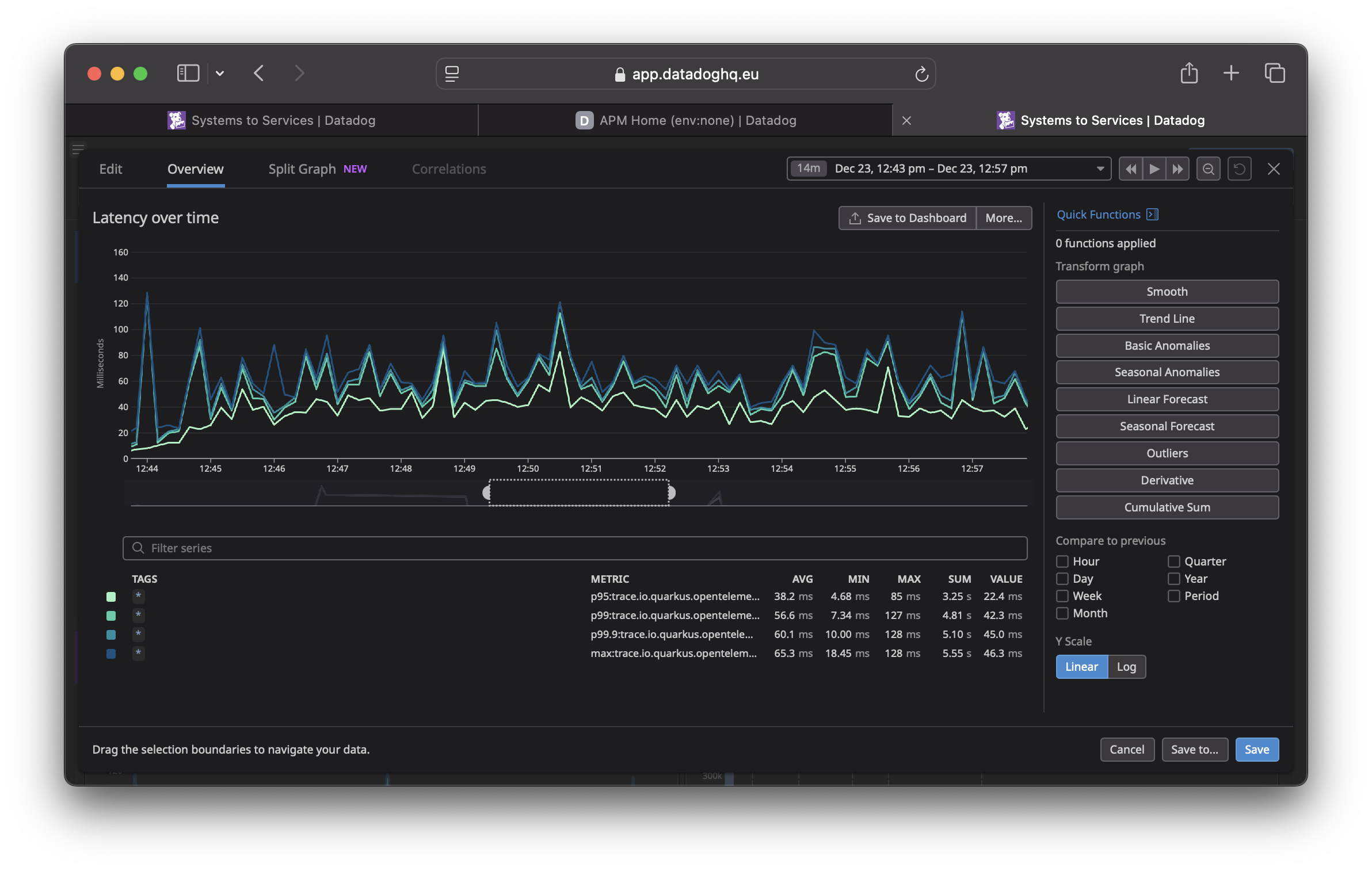

In both cases, the latency-over-time looks like this - very “steady state”-ish:

So - we have a baseline! About 20k requests per second, and somewhere under 1 GiB of memory usage. Interestingly enough, a Graal native image is slightly slower than the JVM - both in terms of request latency and throughput - but uses dramatically less RAM.

To put that in context, that native-image build took 90s, in comparison to 10s for the JVM build. You remind yourself that comparing performance numbers is a slippery slope, but looking at these numbers, you feel you’d probably only bother with native-image for this workload if memory consumption was a driving constraint.

The “Colleagues” Pass Judgement

With great anticipation at the unveiling of the first of your three-part masterpiece proof-of-concept series, you drop a link to your PoC into the team chat. Once again, the engagement you had visualised does not appear. Someone posts a snarky meme implying Java users are boomers. Someone else observes that those request rates are “actually kinda good?!”. As you let the praise wash over you, you crack open a new terminal and type `go mod init`.

The terror sets in.